Composer is a writing tool designed for English-language learners who want to improve their English writing skills. It lets users practice on demand and receive AI feedback and scaffolding that provide actionable information to inform future learning activities outside the classroom.

My Role

I led the UX research and design of Composer as a UX lead and individual contributor.

Experience Strategy: I partnered with one producer and one product owner to uncover insights and translate concepts into features that address users’ writing behaviors and learning motivations.

Planning & Product Vision: I defined the product with my product owner and other stakeholders. I evangelized the product vision and balanced team members' personal goals against business goals. I prioritized and negotiated features for launch and beyond.

Research Strategy: I led the UX research activities, including drafting research plans, composing research protocols, and strategically executing discovery research and usability testing. I analyzed the findings and provided actionable user engagement insights to influence decision making.

Design Execution & Validation: I produced journeys, wireframes, prototypes, and design specs, translating user insights and business needs into a prototype.

My team

ETS Foundry was established as a pioneer and an incubator to ideate and develop solutions for our organization.

In 2019, our team was assembled to explore how to convert ETS’s cutting-edge NLP (Natural Language Processing) capabilities into a market-facing solution.

TIMELINE

Composer is the first project that I led from end to end at ETS Foundry. We spent over 5 months (03/2020-07/2020) building this prototype.

How do we start the innovative journey

This project was handed to our team as a very open task. At the project kickoff, we learned that our company’s NLP (natural language processing) Lab had AI capabilities that process essays and produce feedback reports on English writing. In the past, our company had used these capabilities to score the TOEFL test and in some research projects.

IDENTIFY THE PROBLEM

English language learners in China struggle to find options to practice and improve academic English writing skills efficiently and effectively. They do not enjoy English writing (yet) and find it hard to persist in writing practice outside the classroom. Meanwhile, they feel frustrated trying to improve their English writing without actionable, personalized feedback. These frustrations limit their academic and career development.

The solution

Provide on-demand practice of writing in English with automated feedback and scaffolding that communicate specific areas of strength and growth for both the writing process and the resulting compositions. Also include motivational elements that promote engagement (e.g., autonomy and relevance), persistence, and self-efficacy.

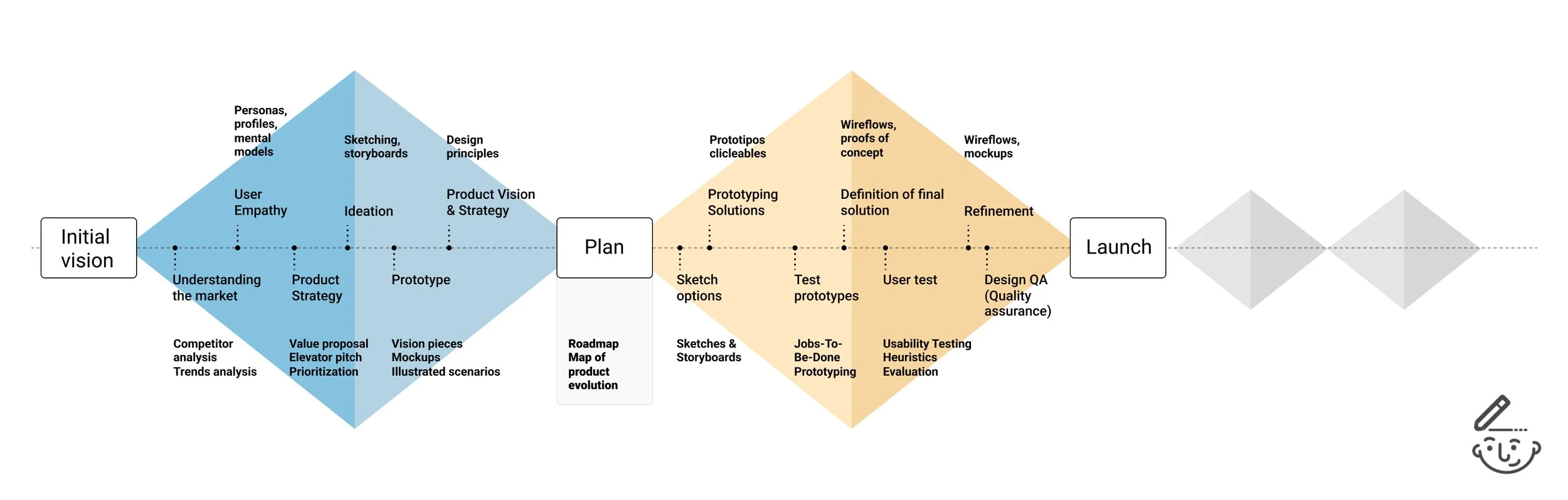

Design Process

Discover

Literature Review

Interviews

Empathy Mapping

User Journey

Problem Statement

Define

Participatory Design

Buy-a-Feature

Concept Testing

User Flow

Mood Boards

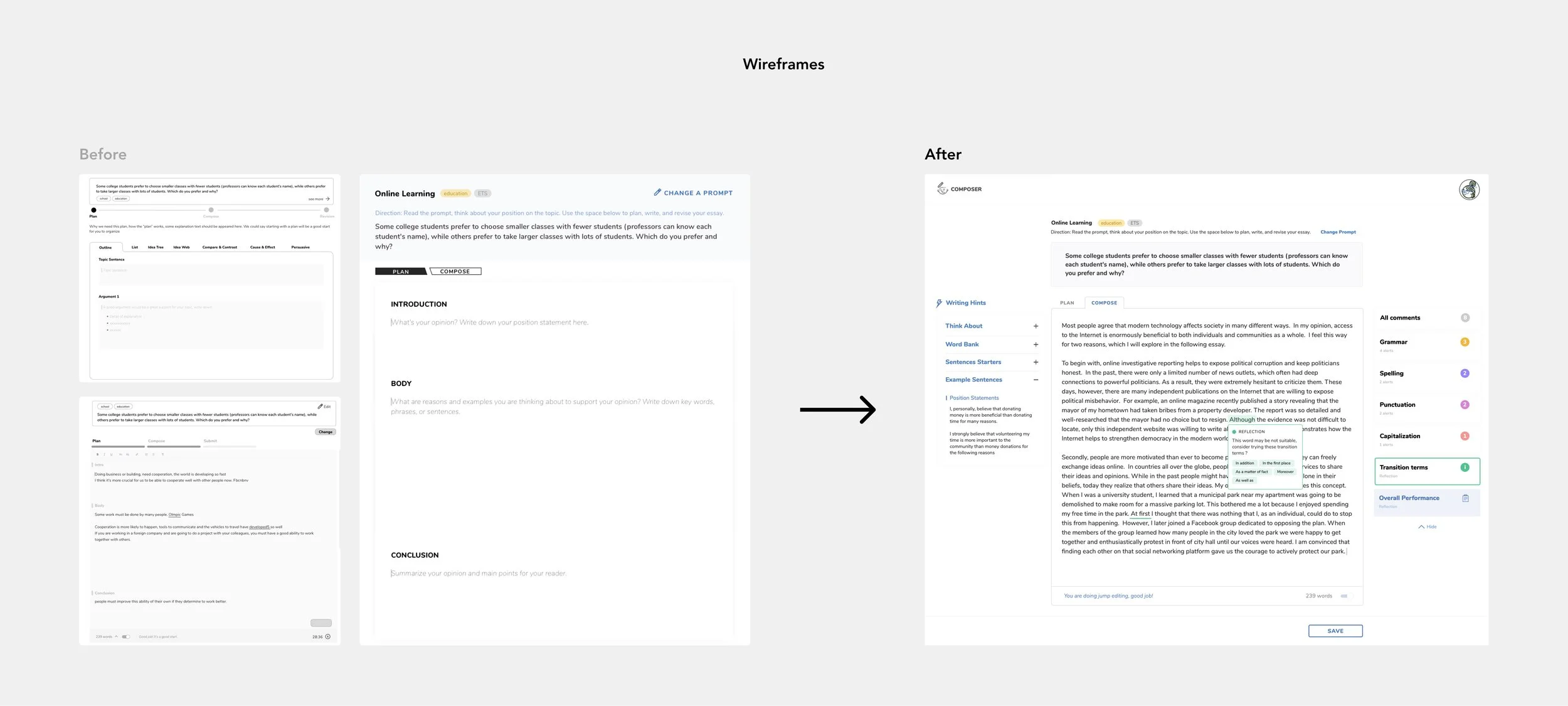

Develop

Mid-Fidelity Wireframes

High-Fidelity Wireframes

Stakeholder Reviews

Design Critique

Pilot Study

Usability Testing

Deliver

Development Reviews

Asset-Prep

Design QA sessions

Team Testing

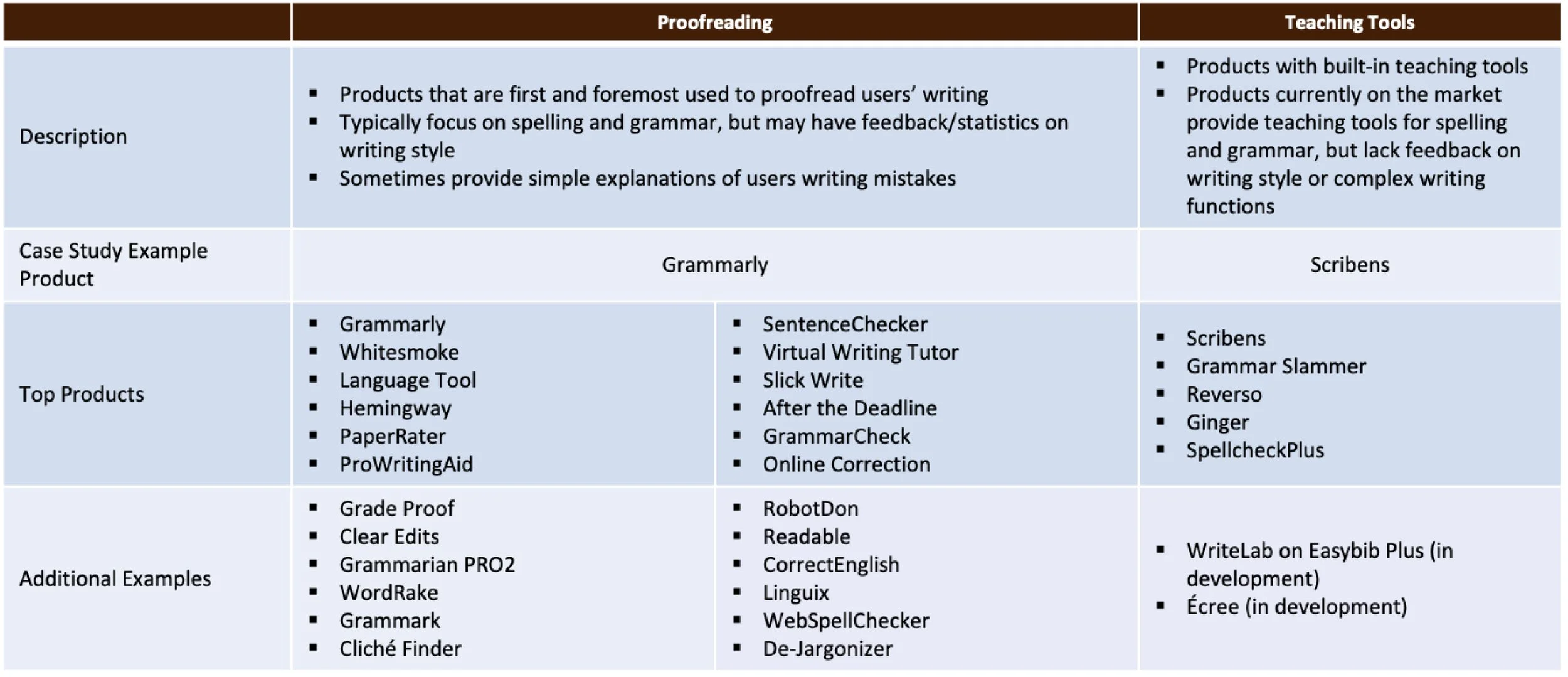

DISCOVER - Literature Review

I reviewed over 10 pieces of literature recommended by the research scientists on our team, conducted a competitor analysis (31 writing support products), and collected previous writing research data from another team. We found that the automated writing feedback product market is saturated with proofreading products. (To comply with my non-disclosure agreement, I have omitted and obfuscated confidential information in this case study.)

Products that function as teaching tools are less common.

Products with complex capabilities (such as feedback on argumentative organization and use of evidence) appear to still be in the development stage.

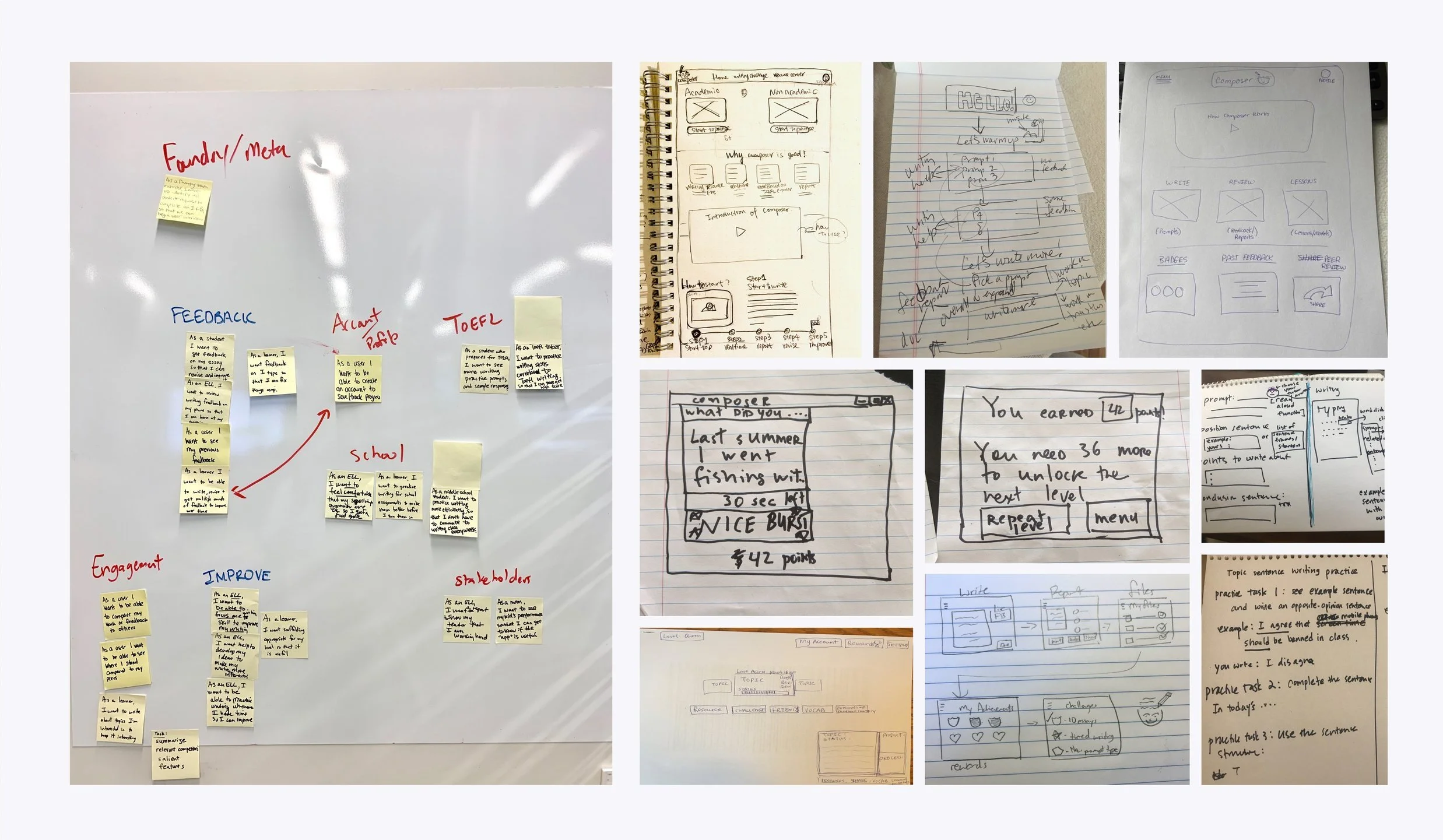

Discover - Ideation for the product

I designed and conducted several ideation workshops with internal and external members of our team to:

Encourage divergent thinking from cross-functional team members.

Align team members on a shared understanding of who our users could be.

Identify the gap between what we know about users and what we do not know about them.

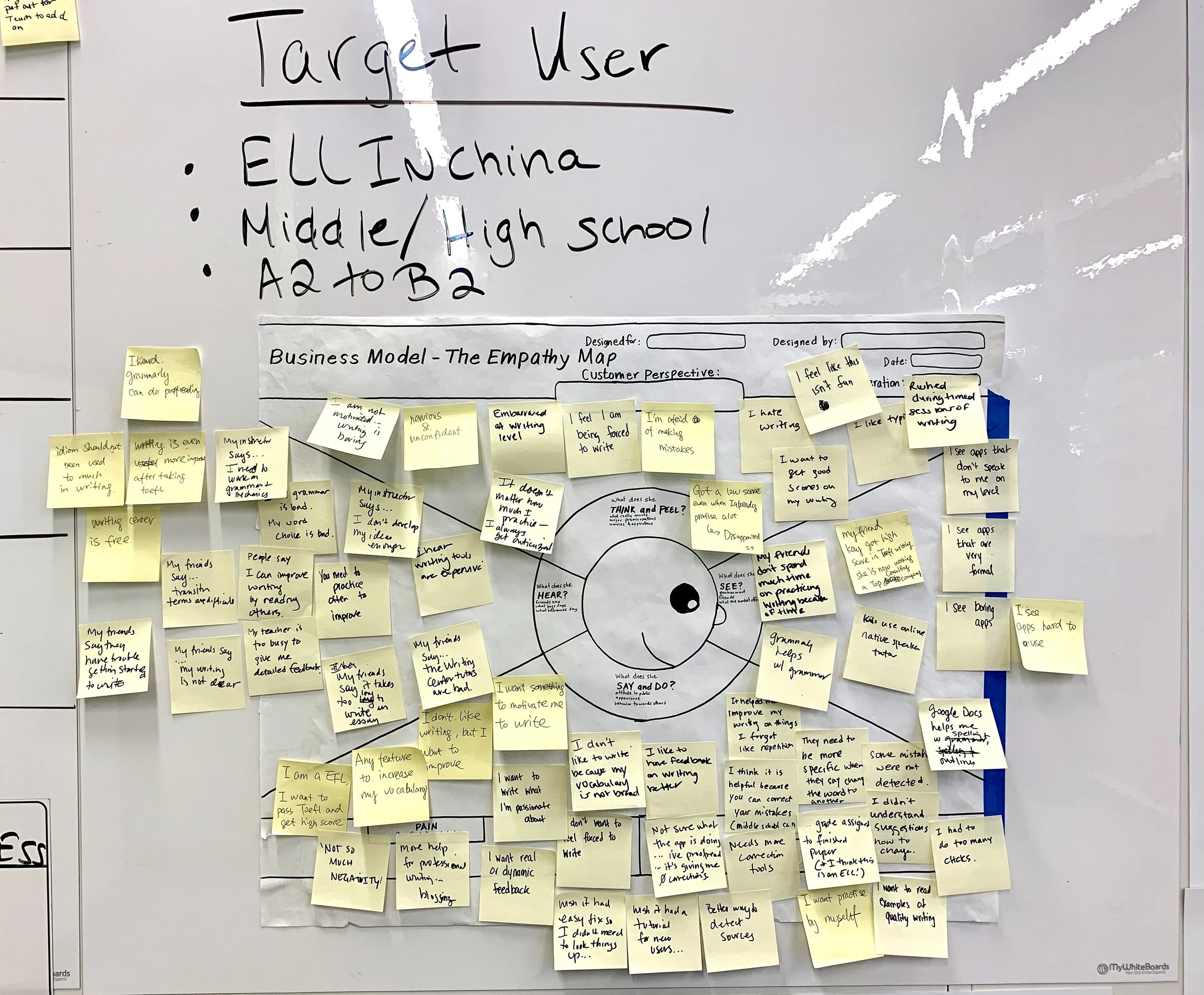

Empathy Mapping

After interviewing 5 Chinese students, 3 recent graduates (Chinese, living in the US), and 3 Chinese teachers, I shared the interview notes and synthesized findings with the team before this workshop. Everyone on the team discussed their thoughts, and I asked team members to do the empathy mapping individually based on these interview findings. Then we synthesized the maps by combining users who exhibited similar behaviors into an aggregated empathy map.

This empathy mapping session forced our team to think deeply about what we knew and what we did not know. It also helped generate a lot of hypotheses that we later tested in user engagement sessions.

User Persona

The results of the empathy mapping session made the user persona workshop easier to conduct, because much of the bias and disagreement had already been resolved in that earlier session. In the user persona session, I divided the team into two groups, and each group was responsible for creating 2 personas based on what we discussed in the empathy mapping session.

User Journey

Then, to find the pain points and the most promising design opportunities for improving the learning experience, I invited my teammates to draft the user journey with me.

Discover - User persona & pain points

Users face barriers throughout the writing practice process. I grouped the pain points into 3 phases: preparing for writing, writing in progress, and after the writing task is done. Based on these findings, I listed three design goals to guide the upcoming product design directions.

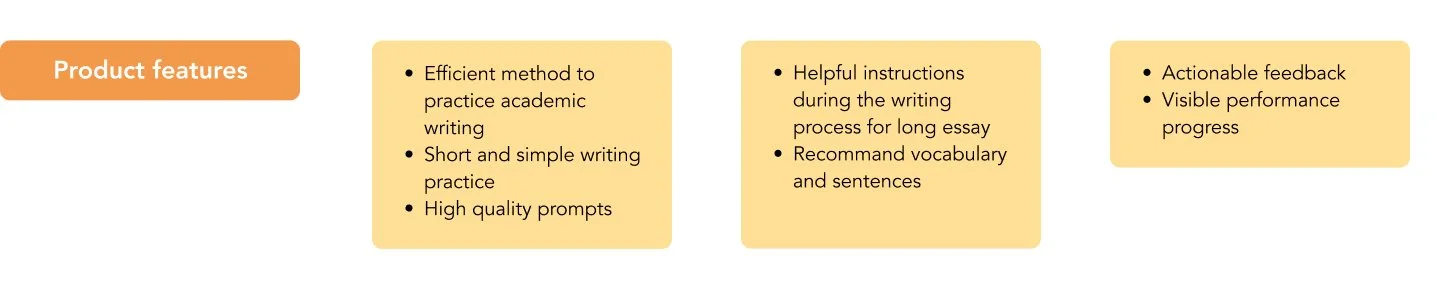

DEFINE

Product Feature Selection and Prioritization

With the data gathered in the previous sessions, I drafted and grouped possible features and solutions for Composer and shared them with the team for discussion.

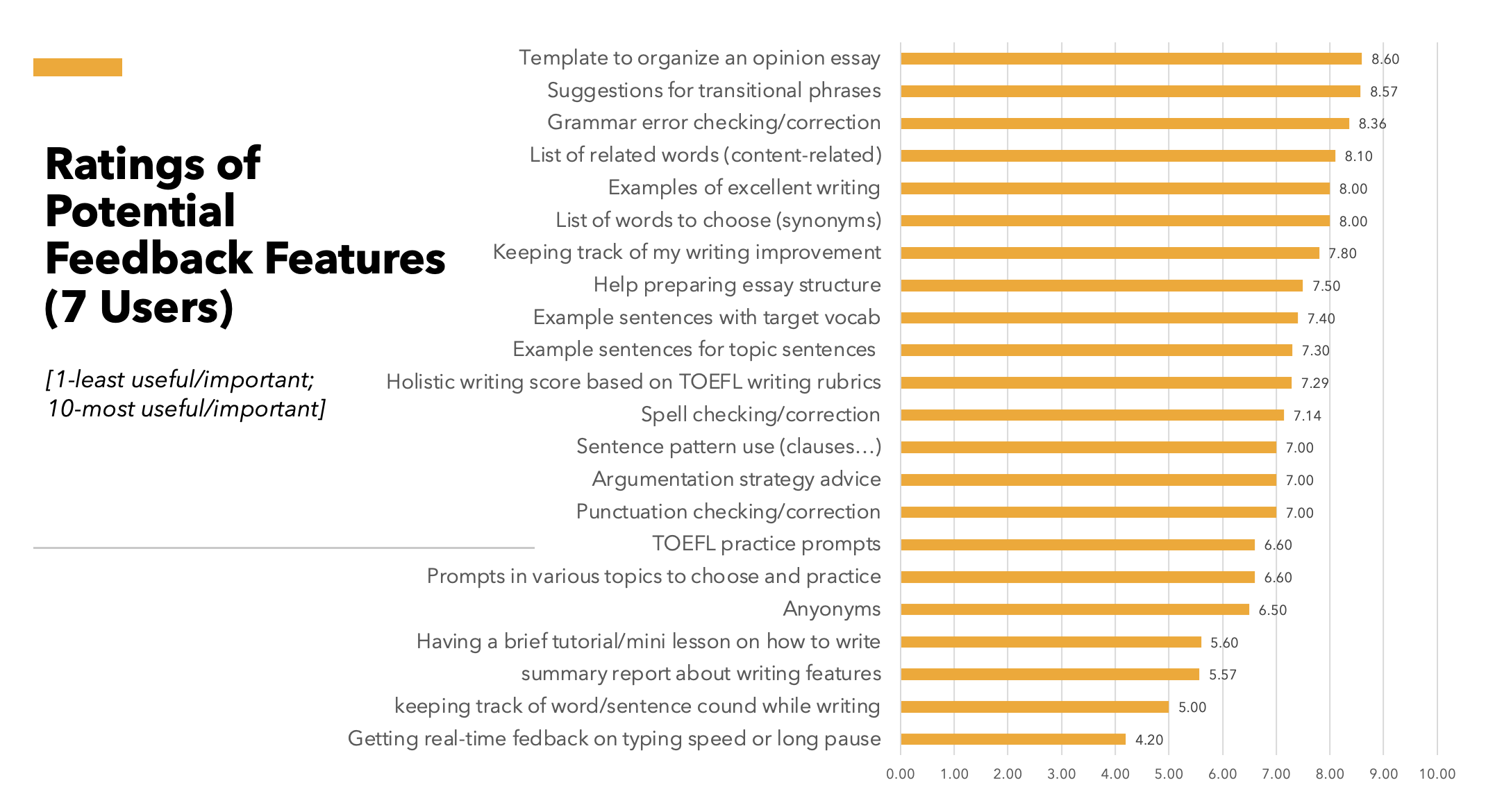

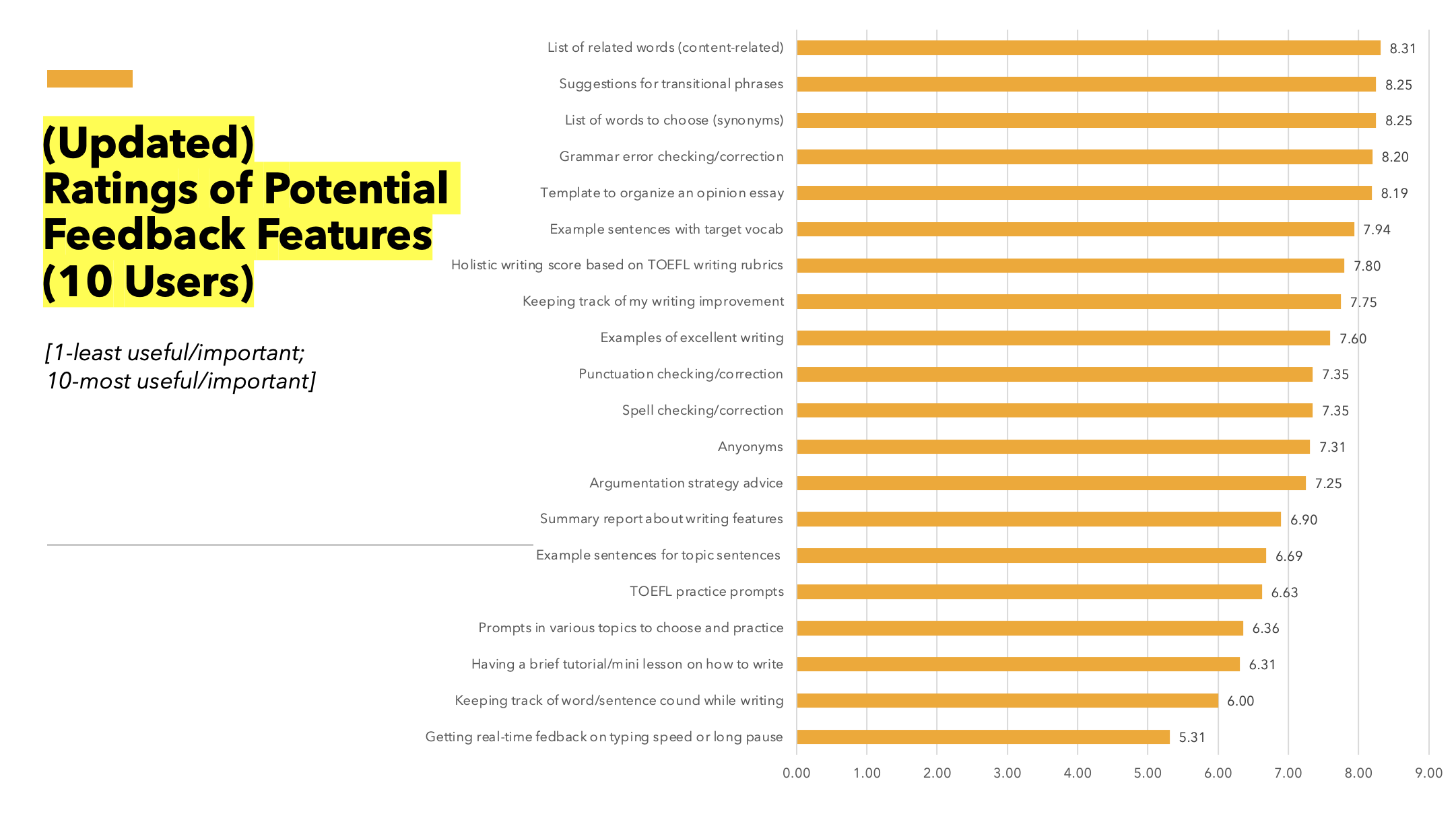

I designed a “Buy-a-Feature” activity covering over 25 potential product features that we could work on. I prepared “Feature Cards” with virtual price tags and asked the team to spend “money” to collaboratively buy features. The result was a prioritized feature list.

To invite end users to co-design with us, we ran the same activity virtually with the students and teachers we had interviewed during discovery.

The insights collected from our team, stakeholders, and external users were used to inform product feature selection and prioritization.
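The tallying step of a Buy-a-Feature session like the one described above can be sketched in a few lines of Python. This is a minimal illustration, not the spreadsheet or tool the team actually used: the prices, the bids, and the rule that a feature counts as “bought” once pooled bids reach its price are all assumptions made for the example.

```python
from collections import defaultdict


def tally_buy_a_feature(prices, bids):
    """Return (feature, total_bid) pairs for funded features, highest spend first.

    prices: dict mapping feature name -> virtual price tag
    bids:   iterable of (participant, feature, amount) tuples
    """
    totals = defaultdict(int)
    for _participant, feature, amount in bids:
        if feature not in prices:
            raise ValueError(f"unknown feature: {feature}")
        totals[feature] += amount

    # Assumed rule: a feature is "bought" once pooled bids meet its price.
    funded = [(f, t) for f, t in totals.items() if t >= prices[f]]
    # Rank by money spent (descending), then by name for stable ties.
    return sorted(funded, key=lambda item: (-item[1], item[0]))


# Hypothetical price tags and bids, invented for illustration only.
prices = {"writing hints": 40, "plan tab": 30, "typing challenge": 50}
bids = [
    ("designer", "writing hints", 25),
    ("scientist", "writing hints", 20),
    ("engineer", "plan tab", 30),
    ("producer", "typing challenge", 20),
]

ranked = tally_buy_a_feature(prices, bids)
print(ranked)
```

Running the same function on the bids from the team session and from the external session would give two ranked lists that can be compared side by side.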

Concept Testing & Team Discussion

Then I drafted low-fidelity sketches of the selected ideas, showed them to users, and learned about their mental models from their feedback. To validate the user needs against foundational research and figure out which features were valuable to build, I held group meetings with research scientists and research engineers to ideate on how to present scaffolding and feedback so that they guide users to a correct understanding of their learning progress.

With these efforts, I synthesized the insights we collected from the team and users and listed some core features.

Participatory Design Workshop

Also, I led a participatory design session and asked the team members to draw some initial ideas.

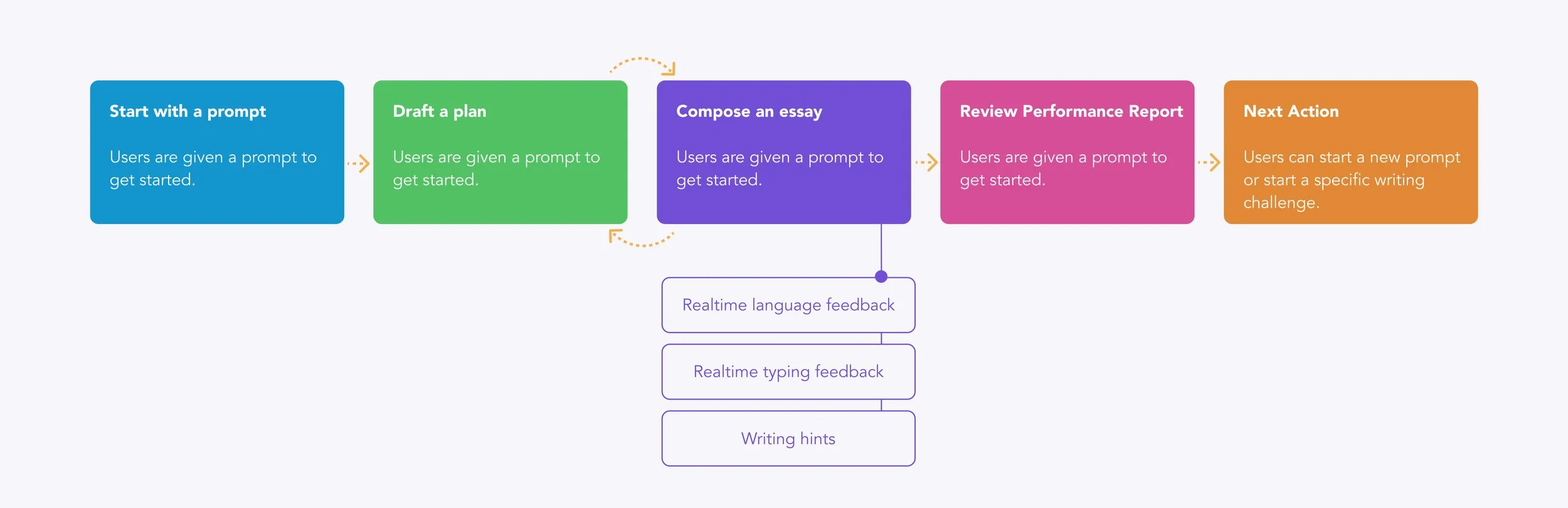

DEFINE - The Results

With these insights and suggestions from learning scientists, I designed the first version of the core loop flow and finalized it over several rounds of design iteration. The iteration was challenging, and it took a lot of effort to change the original loop and settle on the final one.

The challenge 1

Design an intuitive writing flow to help users learn to write more efficiently

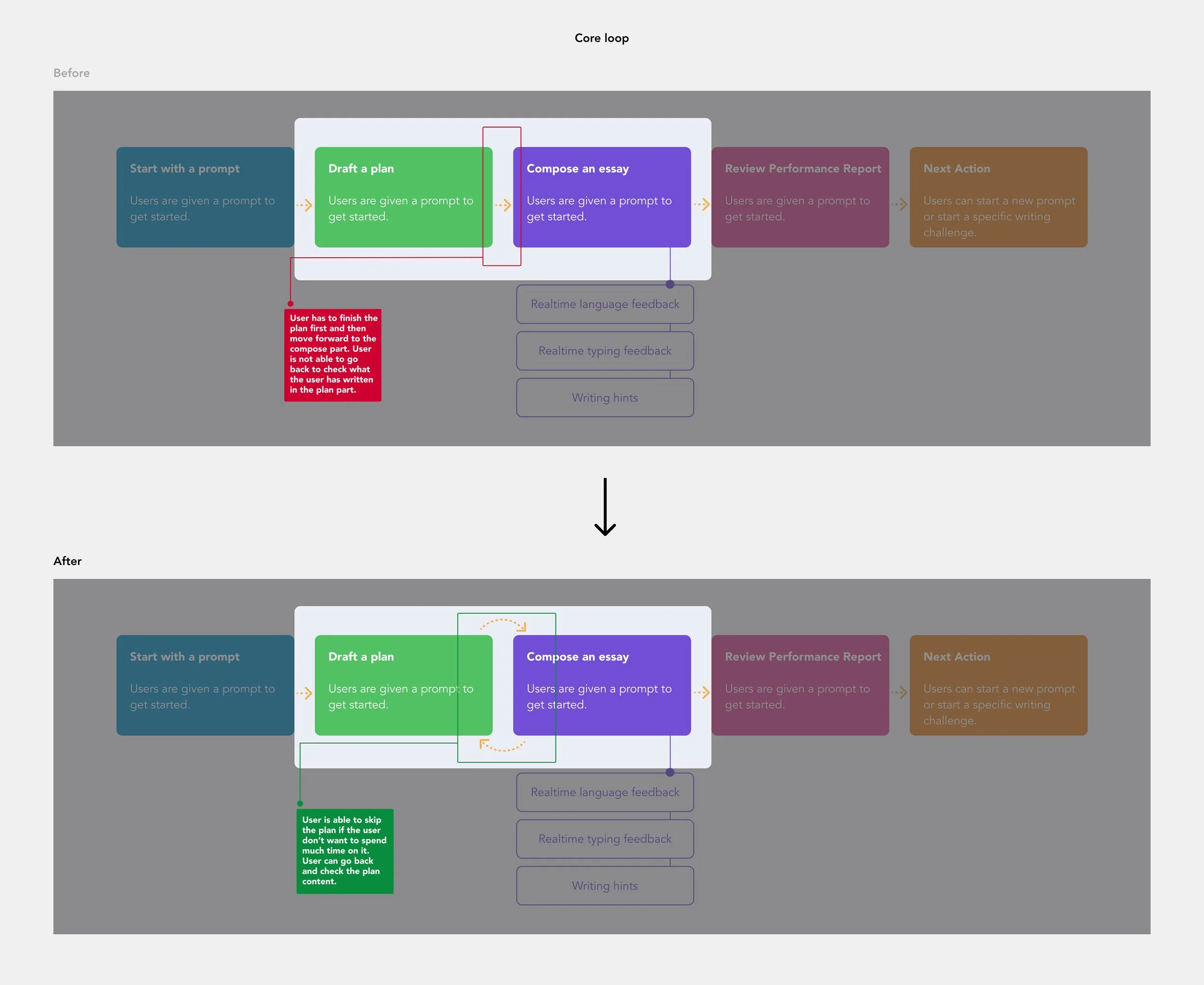

The problem - the original core loop may not be the best choice

I understood why the research scientists wanted to push users to draft a plan first, but from a product design perspective, I had some concerns:

If users do not want to draft a plan, will this flow hurt their experience? Will they just exit and never come back?

If users forget what they wrote in the plan and want to check it again, the current flow does not allow it.

How might we encourage the user to try the plan feature via a seamless design?

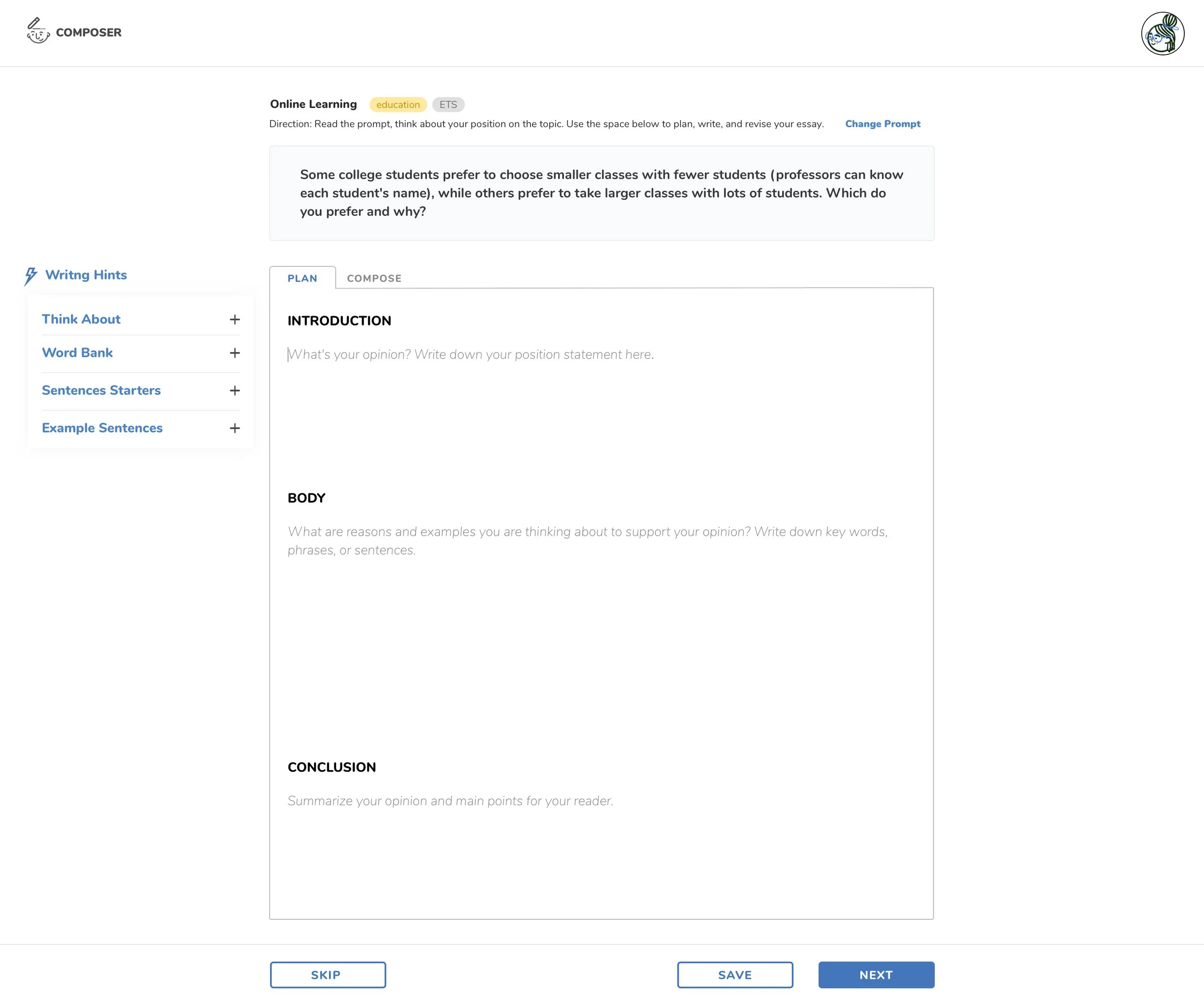

When I designed the core loop, the point of contention was the step from plan to compose. Based on the foundational research and insights from learning scientists, we agreed that we needed a “plan” session in the writing process, because we believed it would be valuable and helpful for users.

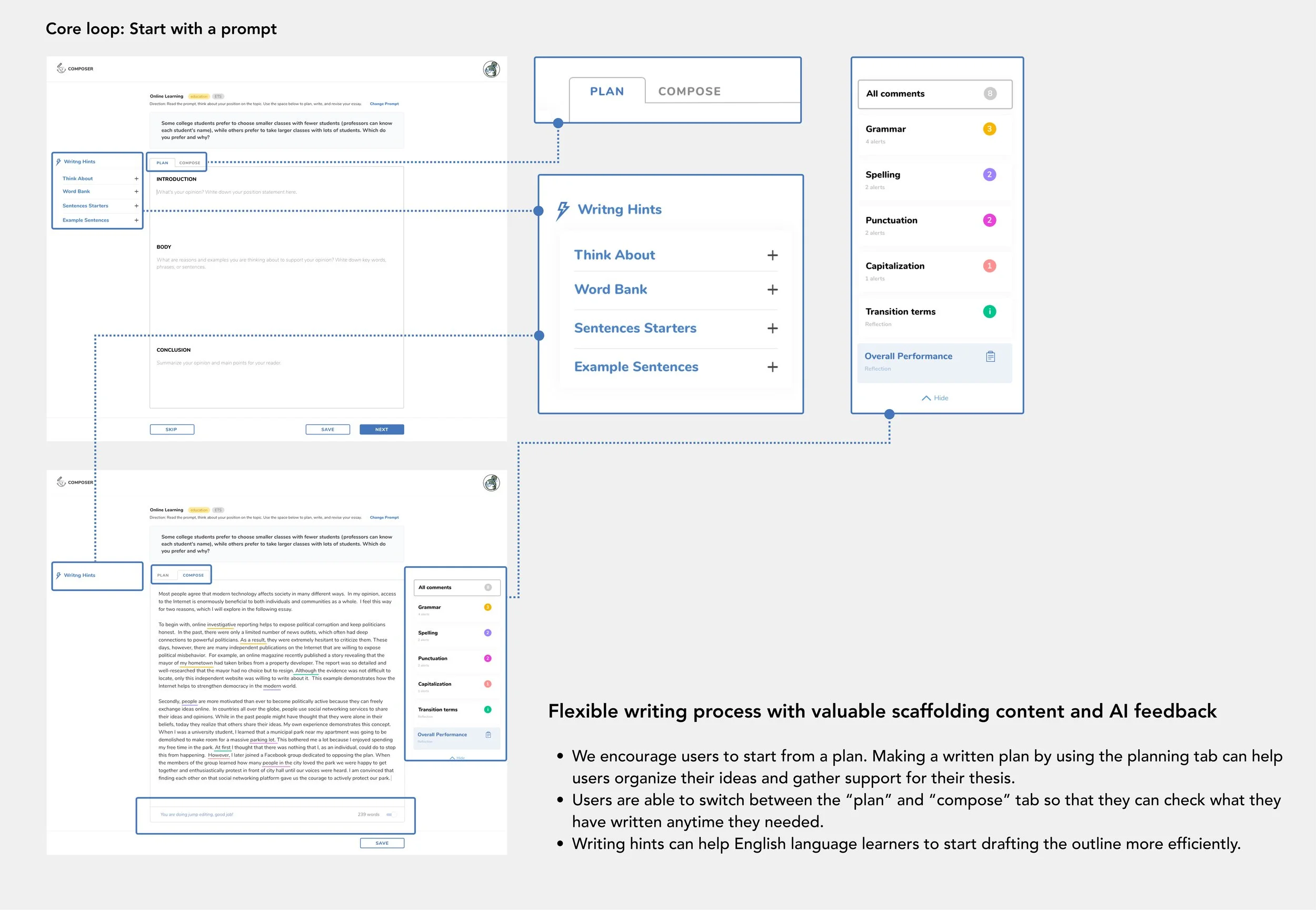

Good writers often make plans before they start writing. Sometimes they just think about their ideas, sometimes they discuss their ideas with someone else, and sometimes they write down their ideas. Making a written plan by using the planning tab can help users organize their ideas and gather support for their thesis.

ETS has some previous prototypes that were designed for foundational research. In these prototypes, users are asked to make a plan first, within 10 minutes, and then move forward to the compose part. Users cannot go back, so they cannot check the plan again unless they copied what they wrote.

The approach

To validate my hypothesis, I designed several different ideas and ran concept testing with users. I also prepared interview questions to better understand how users practiced English writing in a physical setting. After that, I redesigned the core loop flow and the wireframes.

Exploring different design solutions and validating them with users

With these insights, I redesigned the core loop flow and the wireframes. The new loop is more flexible:

The “plan” function and the plan-to-compose order are kept: all users see “plan” as the first step, before compose.

Users can switch between the “plan” and “compose” tabs, so they can check what they have written whenever they need to.

The “plan” tab can be skipped.

The solution

The challenge 2

Translate the complex AI capabilities into actionable feedback

The problem - Get the backstory

To align our team on a shared understanding of our current resources, business needs, and objectives, I collected requests, expectations, and guardrails covering what we had and which directions we were moving towards.

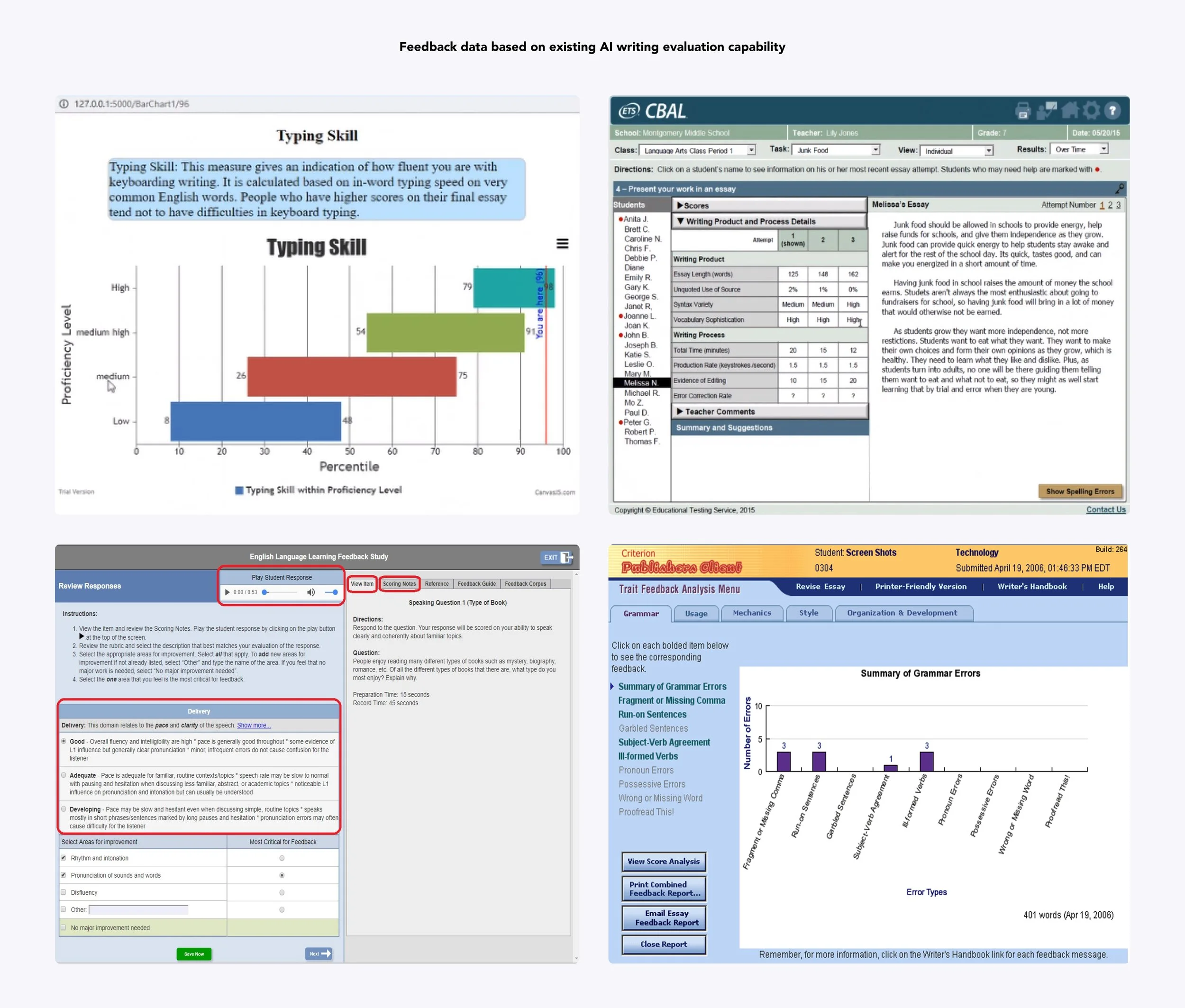

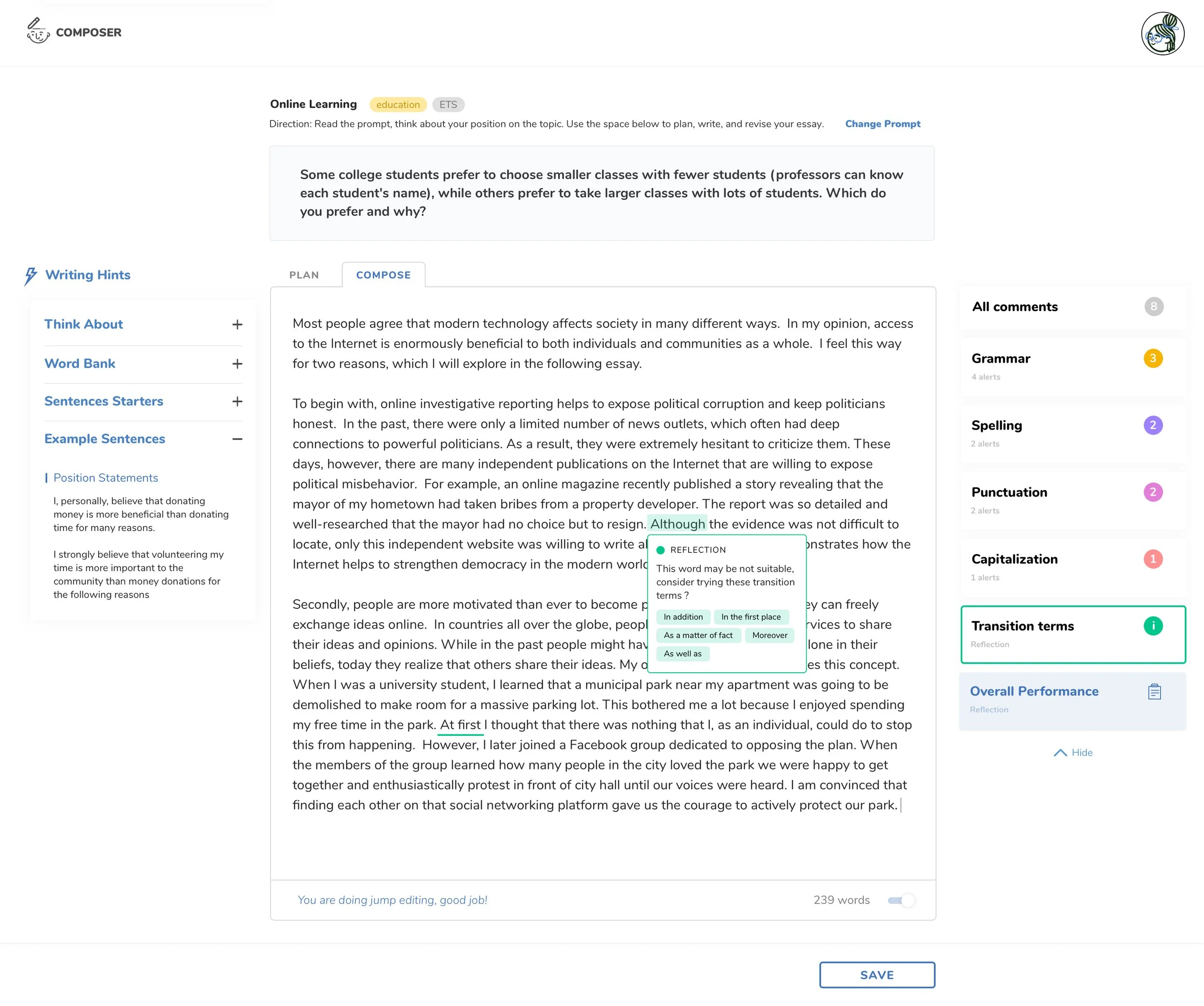

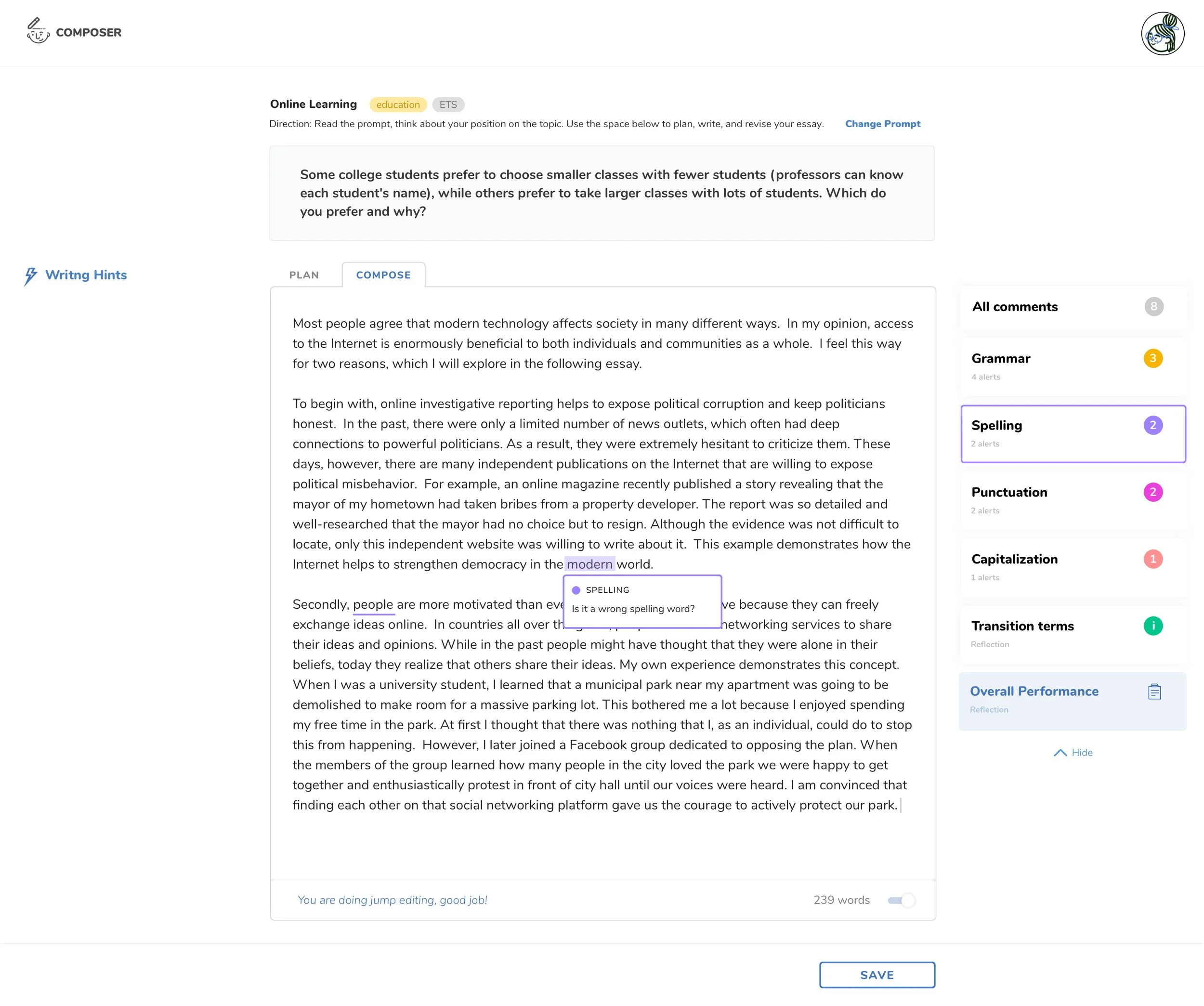

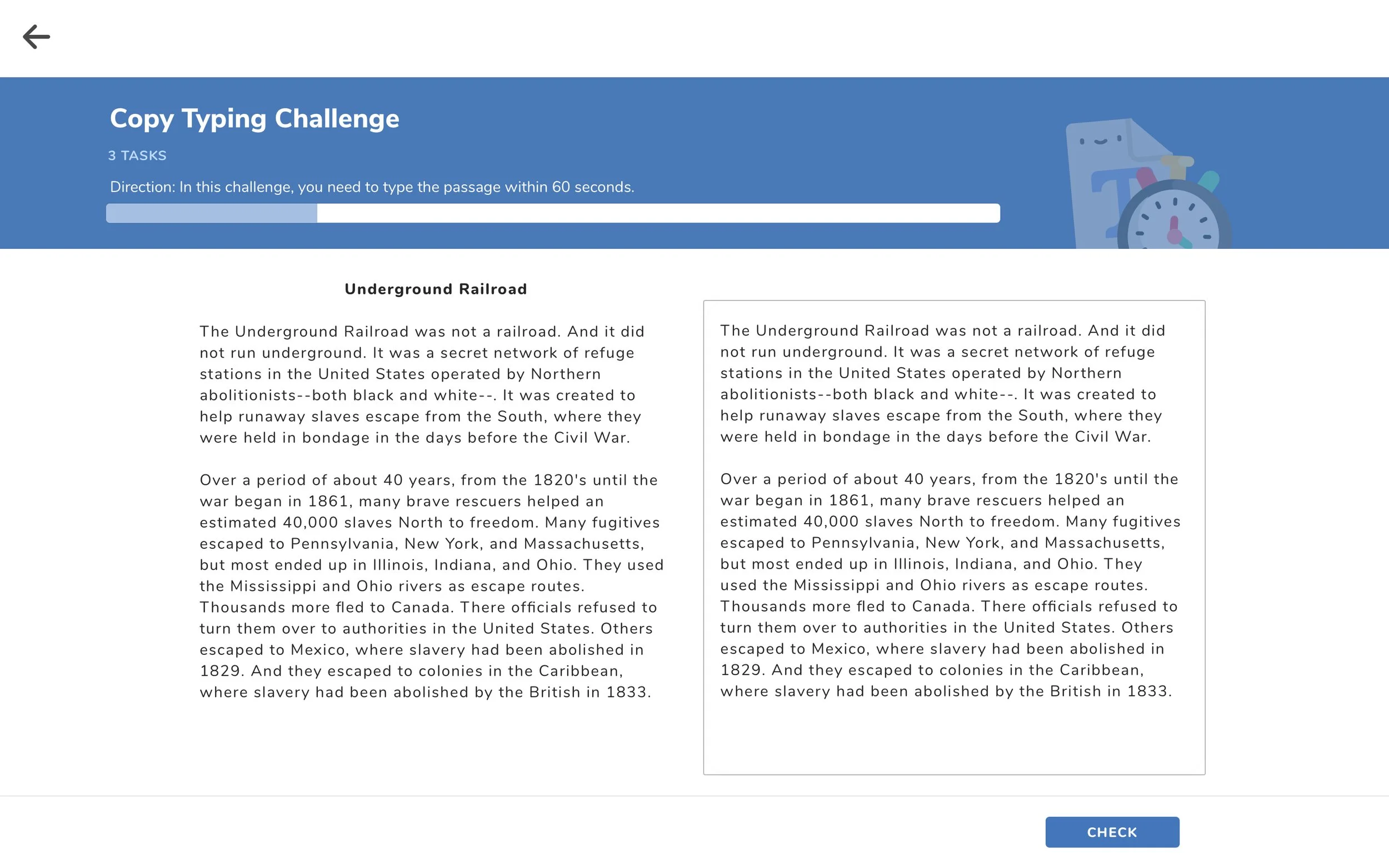

We were asked to build a prototype that embodies this technical capability in a user-friendly way. Our AI writing evaluation capability can produce automated feedback like this (see the images below) and can generate different measures. However, its output is not something users can readily use. Our team’s experiments could inform which promising features the NLP Lab works on in the future. We can also build prototypes to collect data for them.

The approach

Card Sorting

We started with numbers, constructs, variables, and feature output. To understand users’ mental models, I selected the potential feedback features with the team and then conducted several rounds of interviews to test them with users.

I designed an open card sorting task and asked users to talk about how they process feedback and what they need most in their English writing, and then to rate each feedback feature. Finally, I collaborated with the team to develop feedback content and criteria based on content and technical capabilities.

Determine the Criteria

After we knew what feedback to provide and present to learners, the next step was to determine how to convert the raw values into actionable feedback. I worked with research scientists and research engineers to determine the criteria that differentiate strong writing from weak writing. Then I asked the scientists to provide user-friendly language to help users interpret the results. (To comply with my non-disclosure agreement, I have omitted and obfuscated confidential information in this case study.)

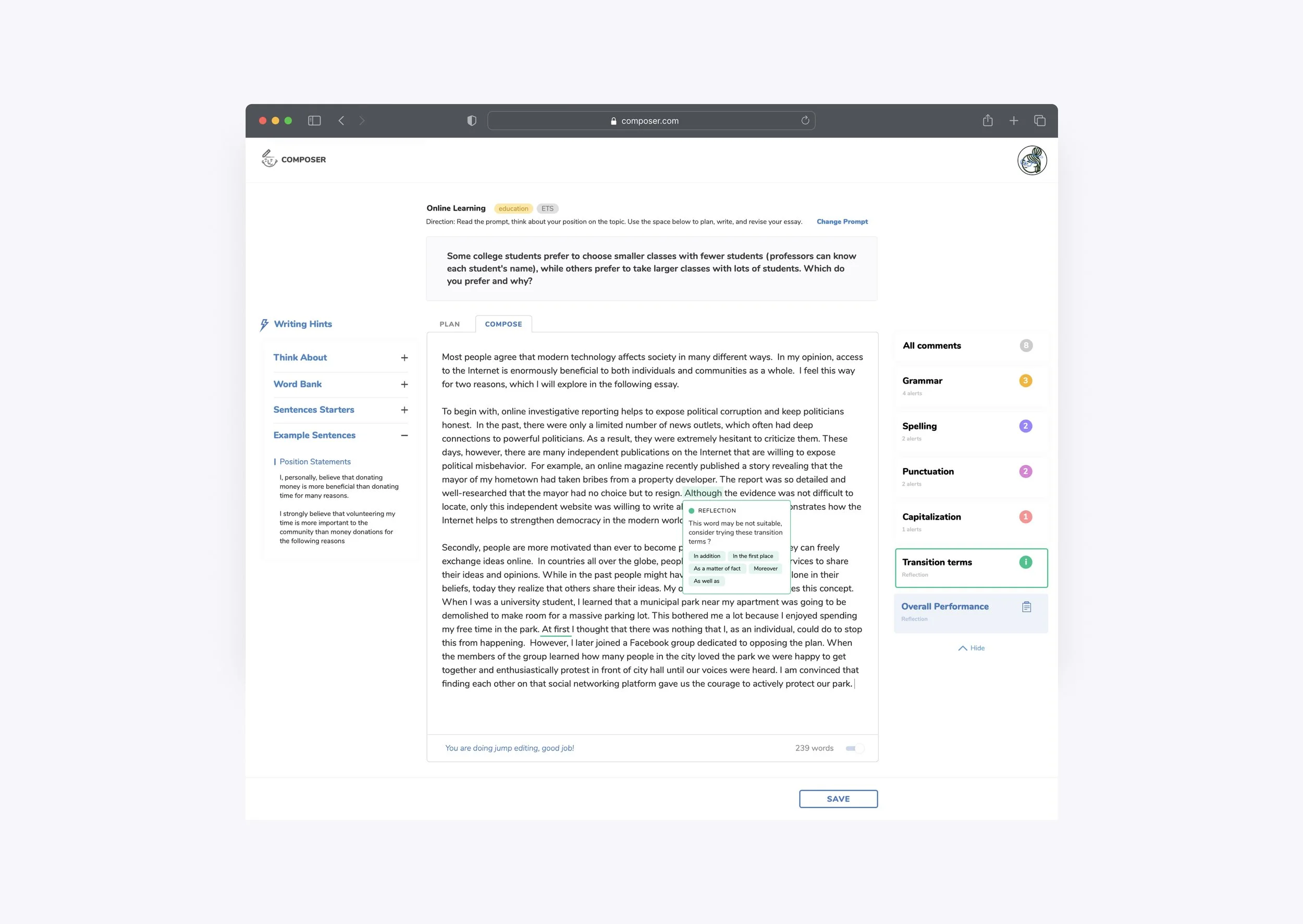

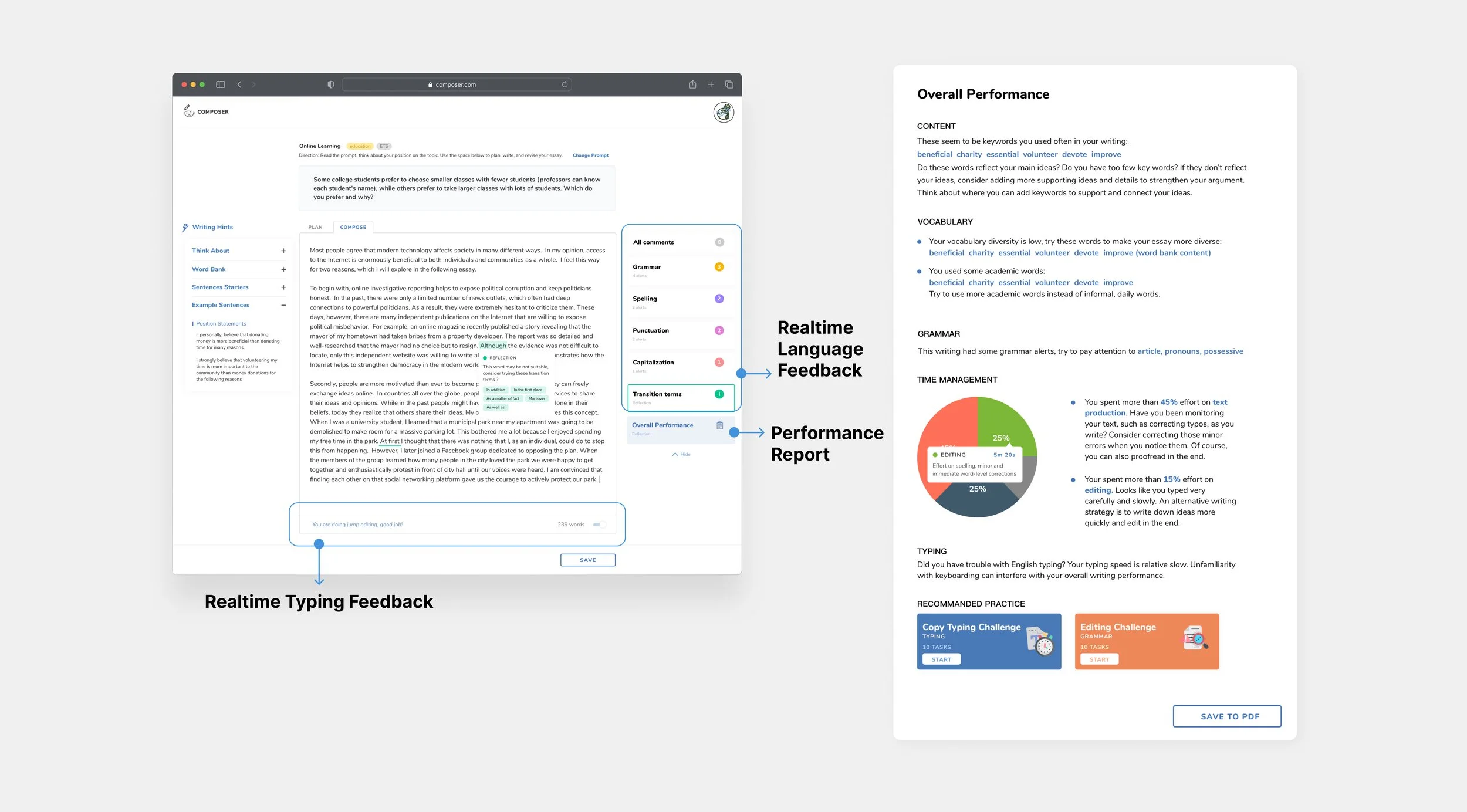

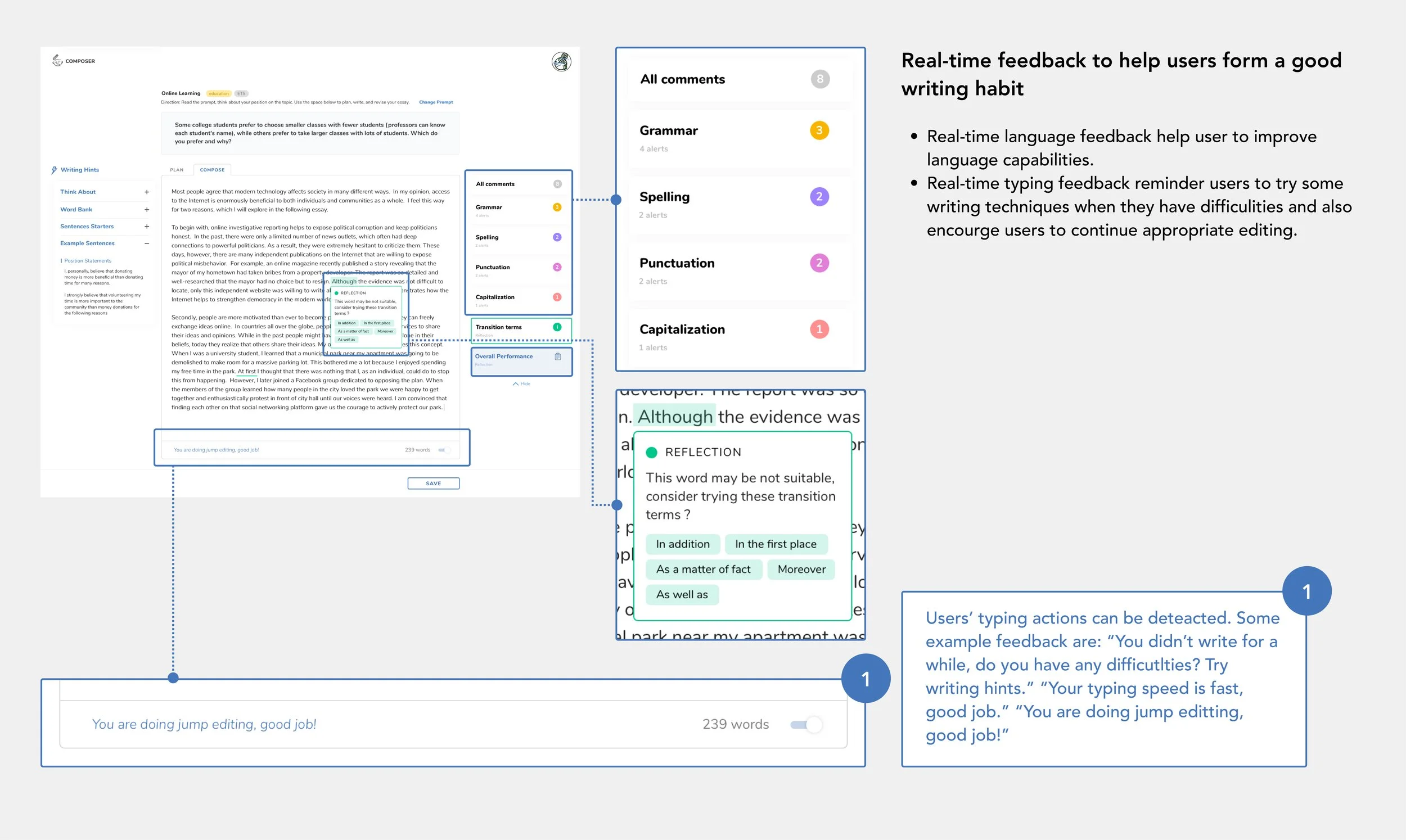

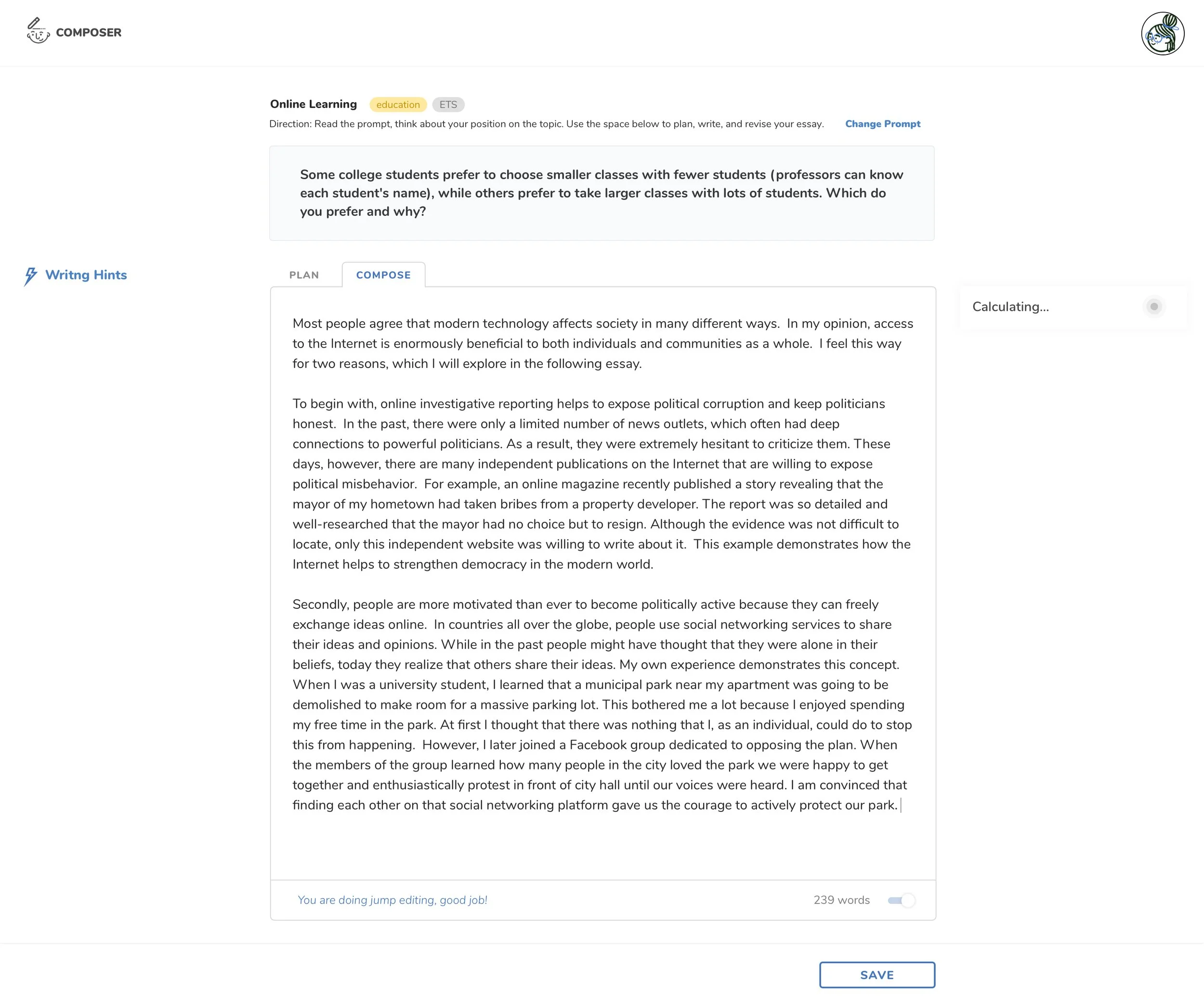

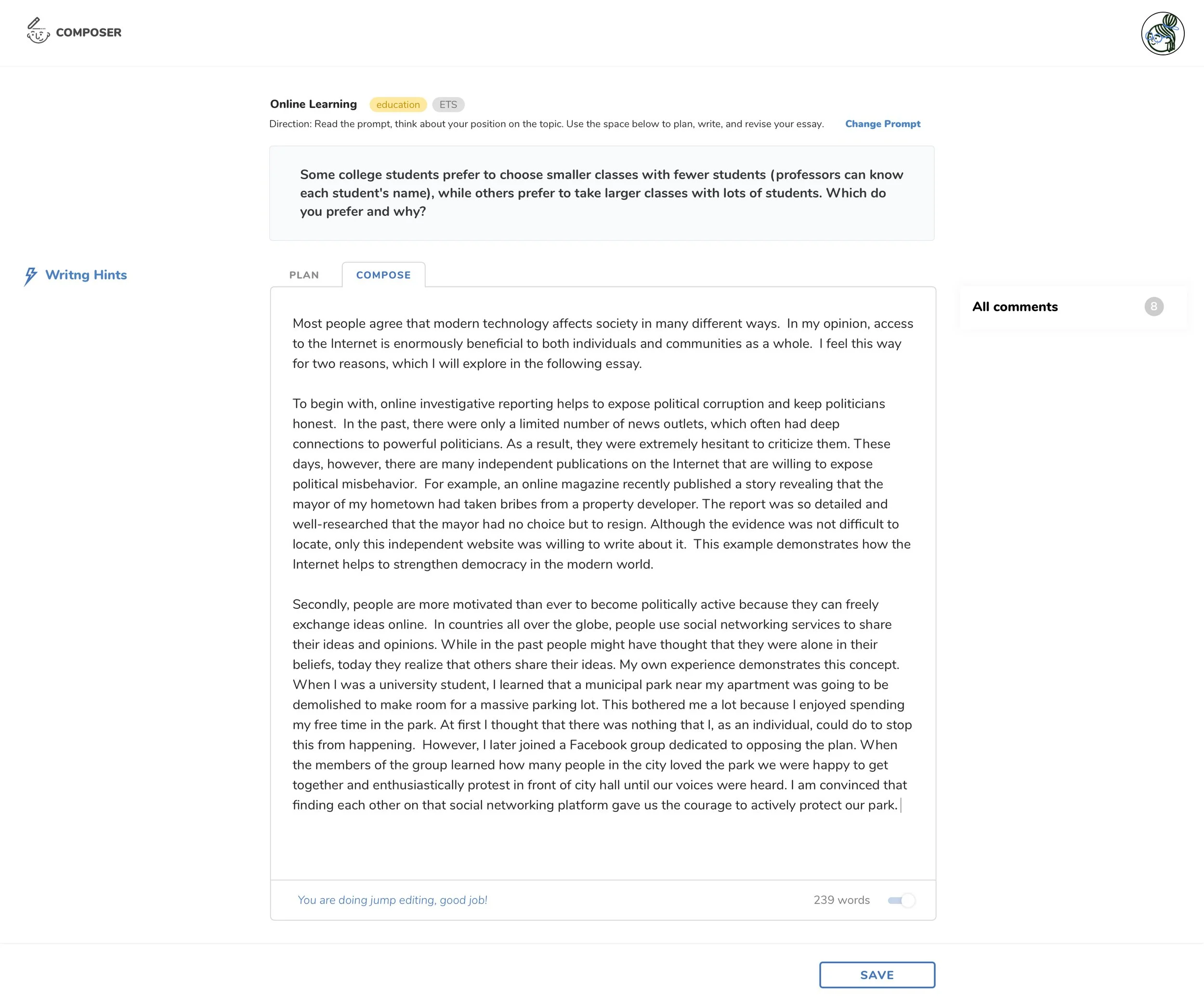

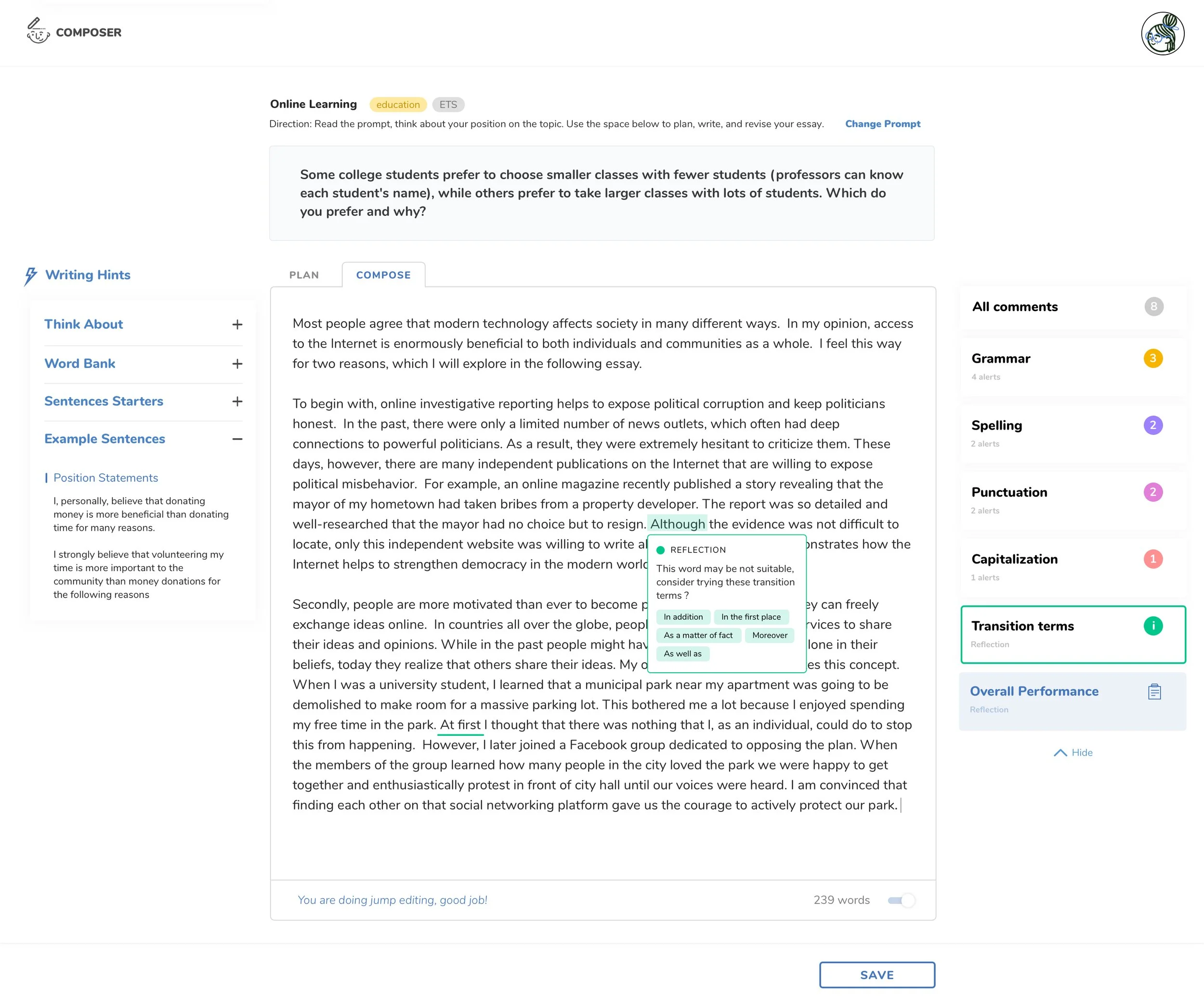

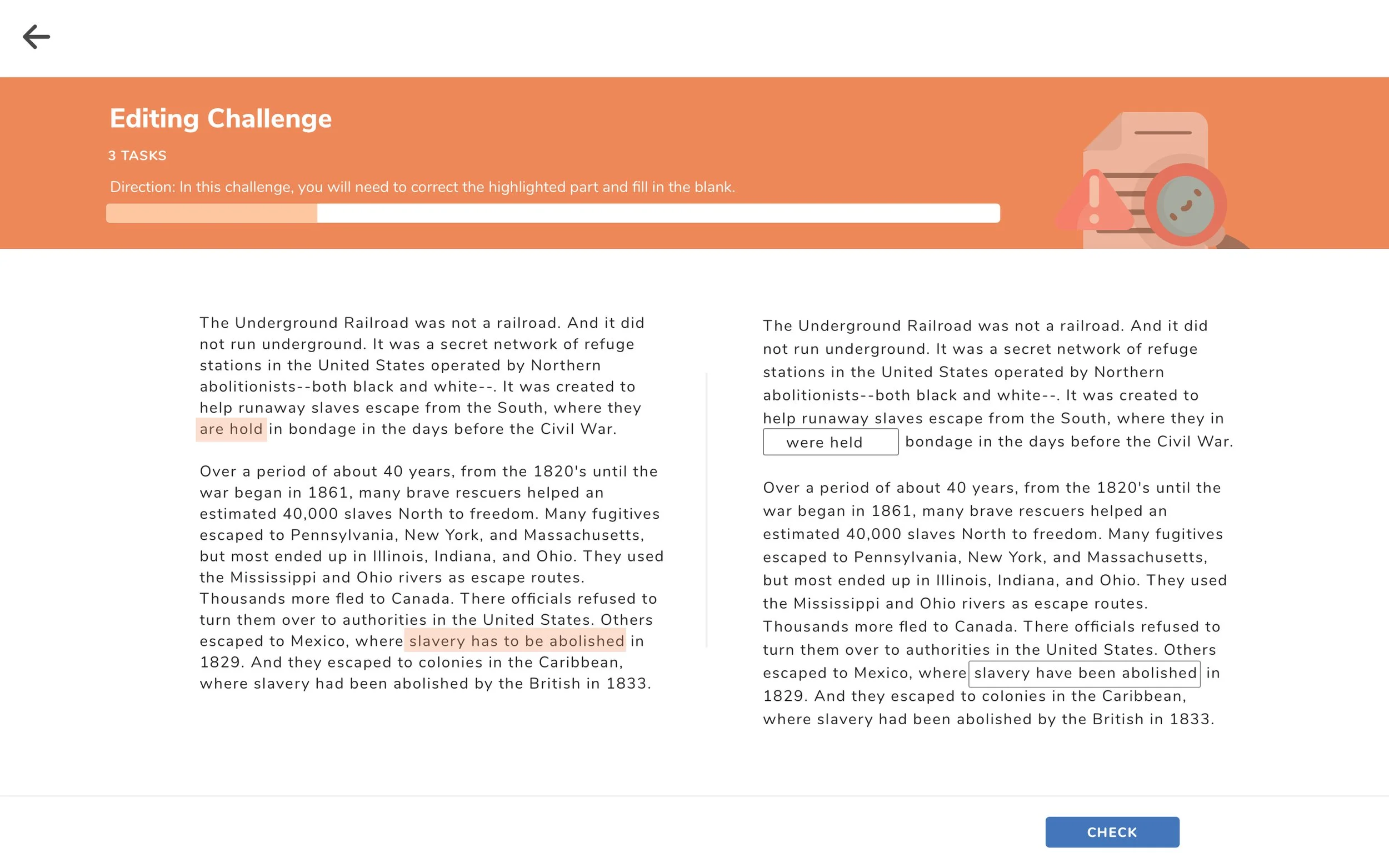

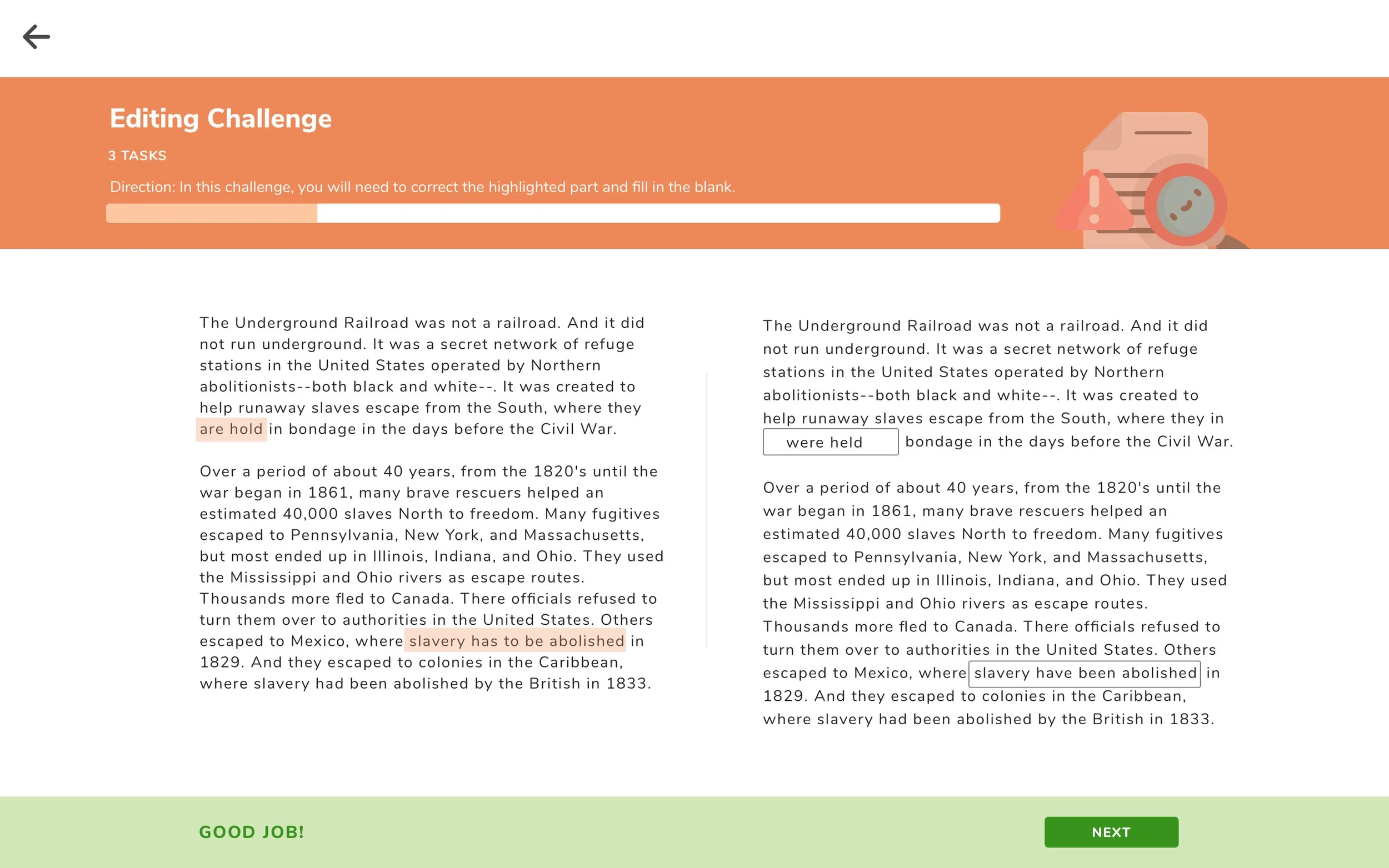

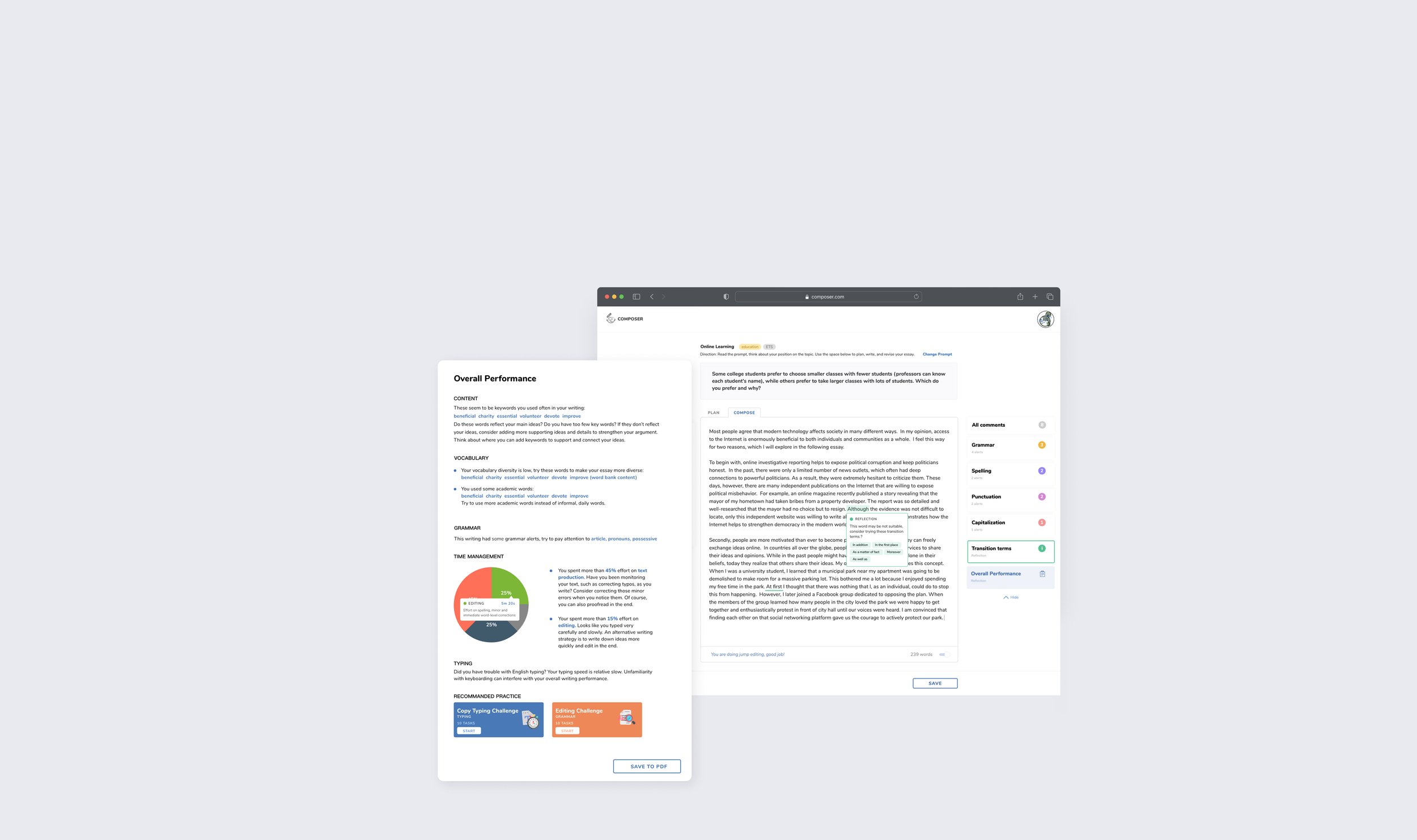

With these insights, we determined how to convert “raw value” to “feedback”, and I divided the feedback into two categories: real-time (typing and language) feedback and an overall performance report.

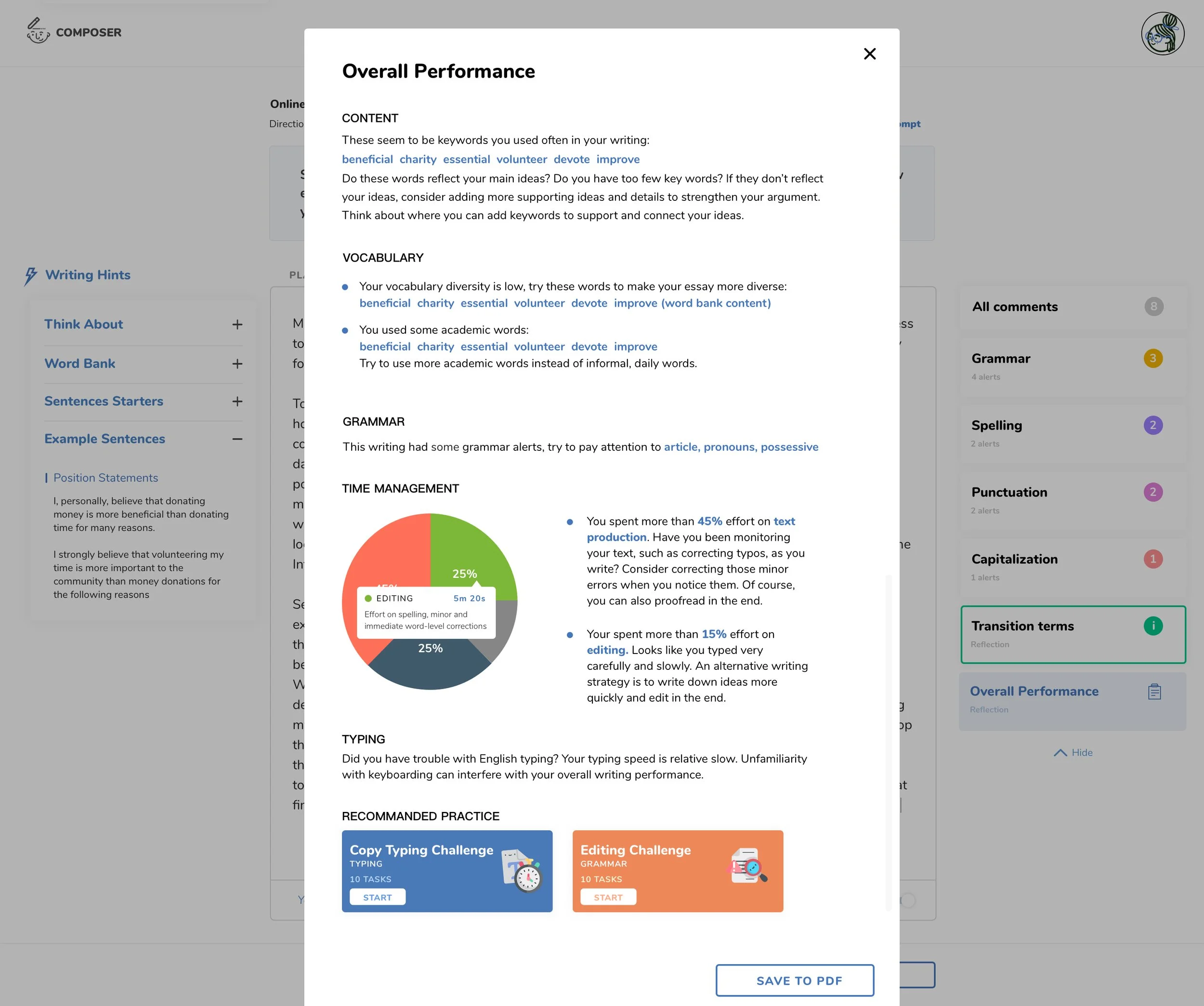

Real-time feedback appears while the user’s writing is in progress. The overall performance report is a summary of the user’s writing performance for the prompt.
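The conversion from raw values to feedback described above can be illustrated with a small threshold lookup. This is only a sketch under stated assumptions: the actual criteria are confidential, so the measure names, cut points, and messages below are hypothetical placeholders, not the ones the research scientists defined.

```python
from bisect import bisect_right

# Hypothetical criteria: every cut point and message here is invented
# for illustration and does not reflect the real (confidential) criteria.
CRITERIA = {
    "vocabulary_diversity": {
        "cuts": [0.4, 0.6],  # boundaries between low / medium / high
        "messages": [
            "Low: try replacing repeated words with synonyms.",
            "Medium: your word choice is fairly varied.",
            "High: you use a wide range of words.",
        ],
    },
    "typing_speed_wpm": {
        "cuts": [15, 30],
        "messages": [
            "Low: write ideas down quickly and edit at the end.",
            "Medium: your typing pace is steady.",
            "High: you type fluently.",
        ],
    },
}


def to_feedback(measure, raw_value):
    """Map one raw measure value to a user-friendly message."""
    rule = CRITERIA[measure]
    # bisect_right counts how many cut points are <= raw_value,
    # which is the index of the band the value falls into.
    band = bisect_right(rule["cuts"], raw_value)
    return rule["messages"][band]


def performance_report(raw_values):
    """Build an overall report: one message per measure."""
    return {name: to_feedback(name, value) for name, value in raw_values.items()}


report = performance_report({"vocabulary_diversity": 0.35, "typing_speed_wpm": 32})
for name, message in report.items():
    print(f"{name}: {message}")
```

Keeping the cut points and wording in one table means scientists could adjust the criteria without touching the lookup logic.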

The solution

Real-time feedback

Overall performance report

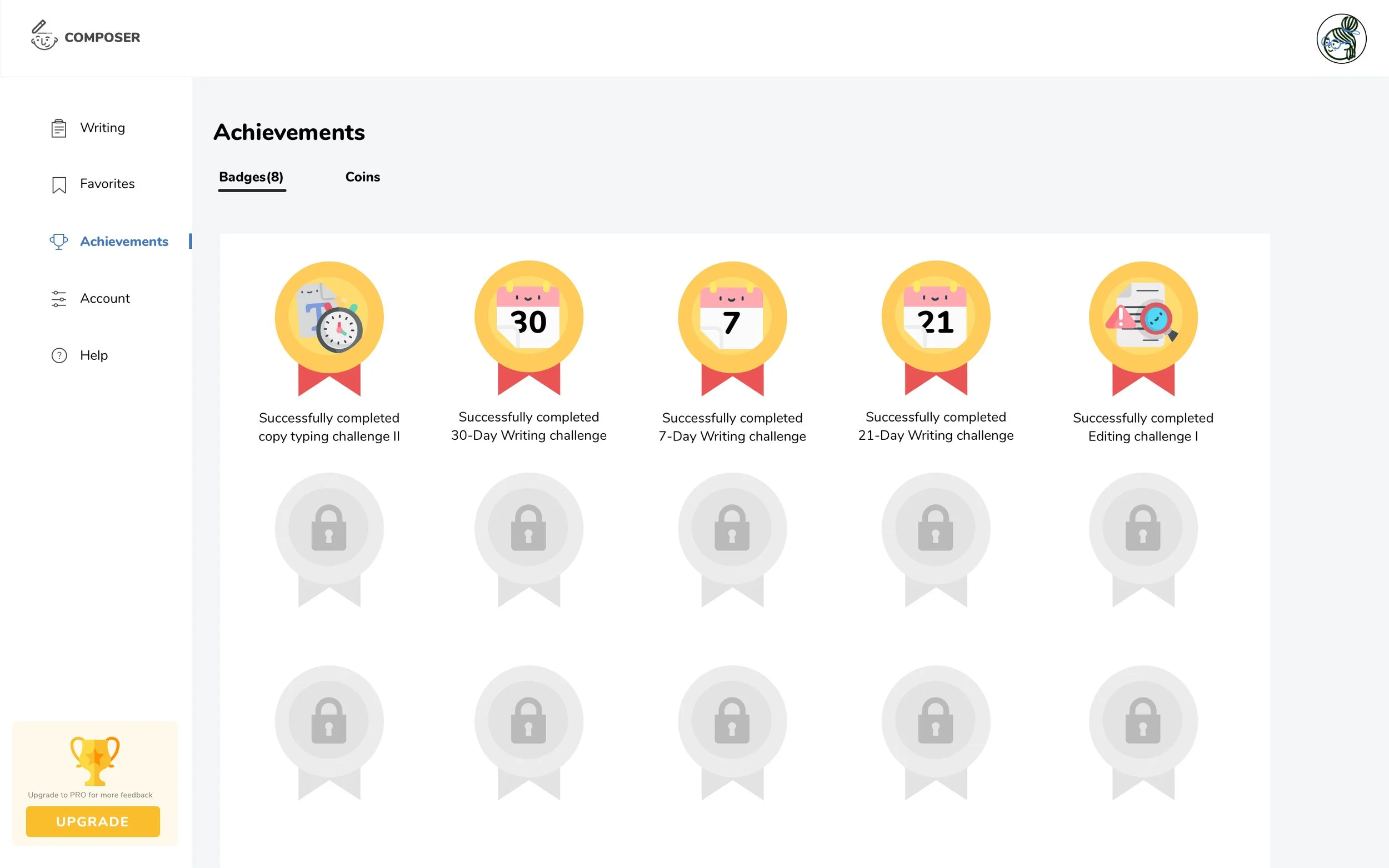

Testing & Iteration

Our team decided that the AI-based learning loop should be the core of our MVP. As a group, we felt that because the concept testing wireframes had tested so well, they could serve as our low-fidelity prototypes. We decided to move on to mid-fidelity prototypes and ran usability testing. Based on the usability testing feedback and the team’s input, I iterated on the design and moved it into high-fidelity wireframes.

Results

Based on our testing results, Composer reached a SUS (System Usability Scale) score of 72. Beyond that, we also found design opportunities for the future and areas to improve.

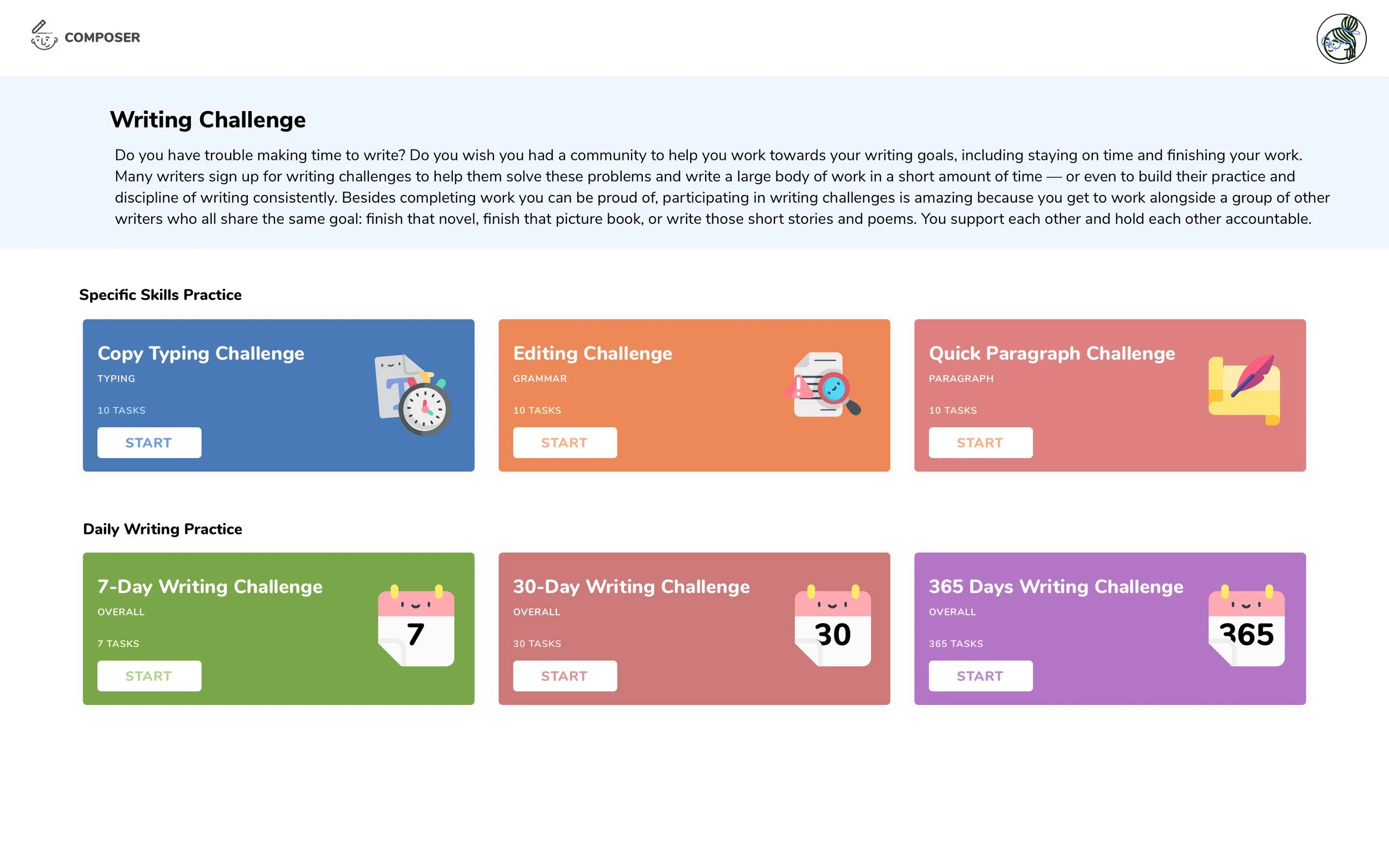

More content. Users love the rich feedback we can provide now, and they pointed us toward building more feedback categories and more short writing challenges focused on specific skills, e.g., using given words to make a sentence, reading practice, and sample essays.

New use cases. From talking with users, we discovered new use cases for a future Composer, e.g., classroom use by teachers.

Redesign the prompt title area. Most of the testers did not notice the prompt at first glance, and it took them a few seconds to find where it was.

Simplify the navigation and focus on core features only. Too many tabs in the navigation made our testers lose focus.
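For readers unfamiliar with how a SUS score such as the 72 reported above is calculated, the standard scoring rule can be sketched as follows. The questionnaire answers in the example are hypothetical and are not the study’s data; only the scoring formula itself is the standard one.

```python
def sus_score(responses):
    """Score one participant's 10 SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected 10 responses, each an integer from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute answer - 1.
            total += answer - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute 5 - answer.
            total += 5 - answer
    return total * 2.5


def mean_sus(all_responses):
    """Average the per-participant SUS scores for a study."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


# Hypothetical answers from two testers, invented for illustration only.
participants = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [5, 1, 4, 2, 4, 3, 4, 2, 4, 2],
]
print(mean_sus(participants))
```

The study-level score is the mean of the individual scores, so a single low-scoring tester can pull the headline number down noticeably in a small sample.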

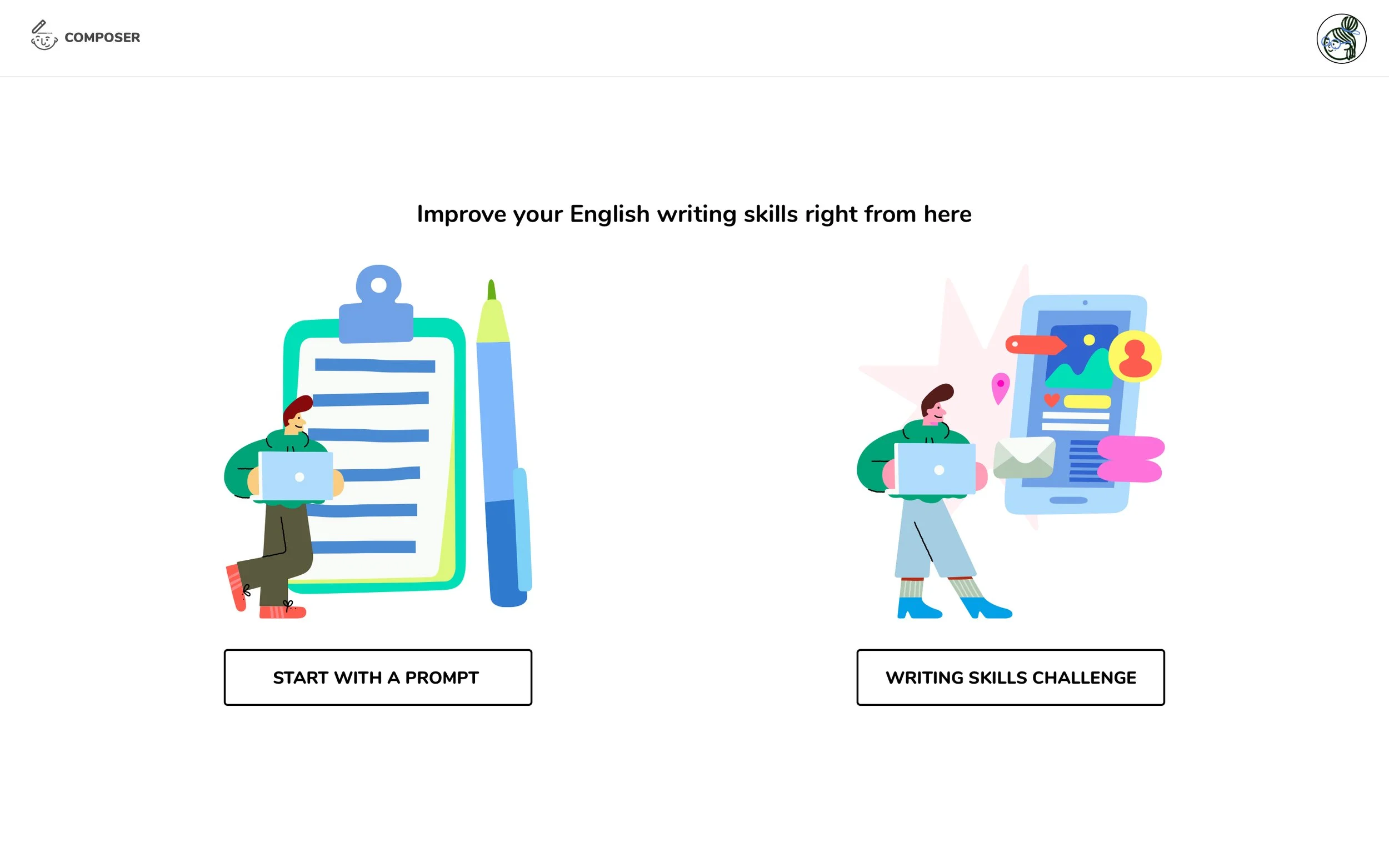

Let’s meet Lin

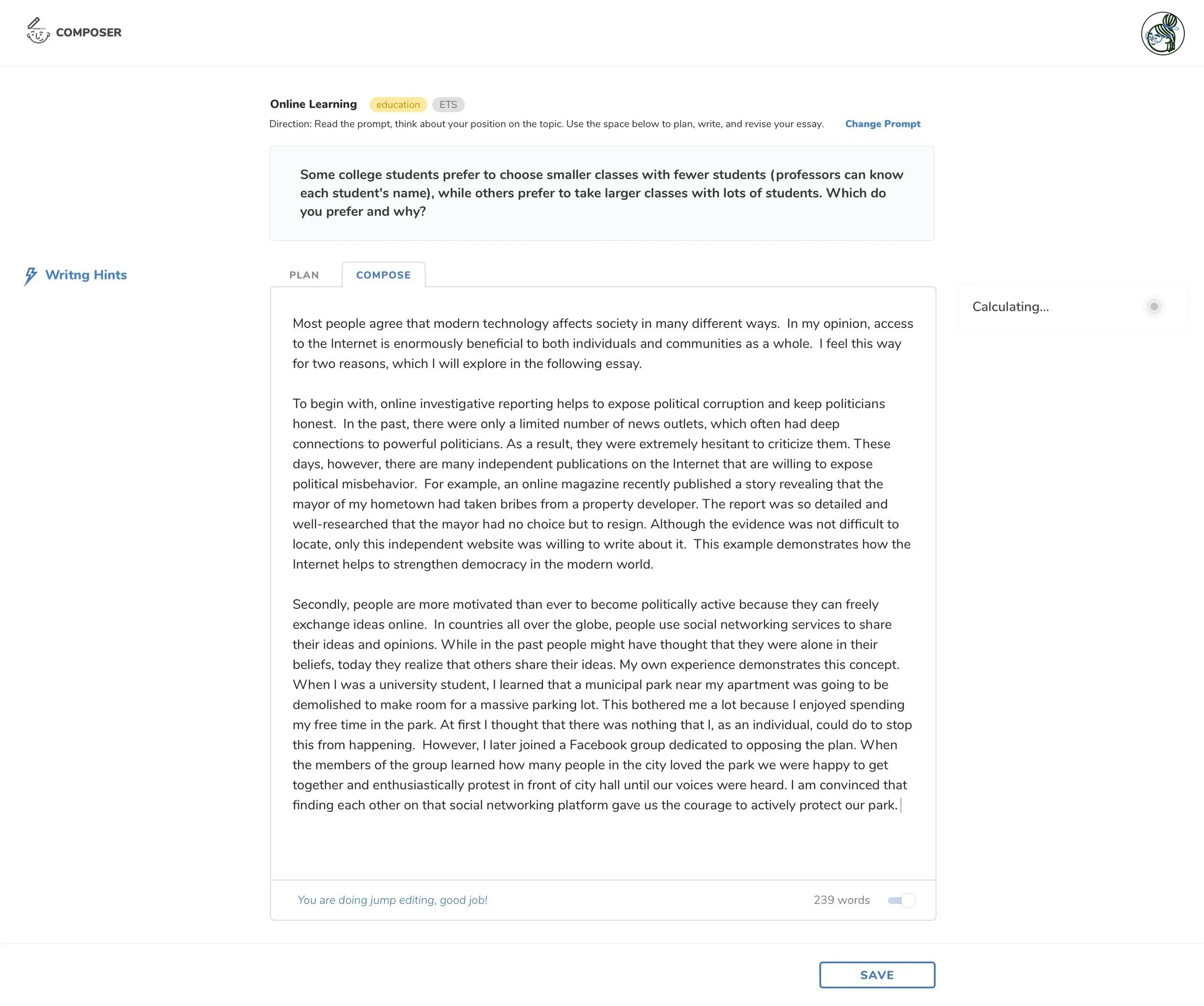

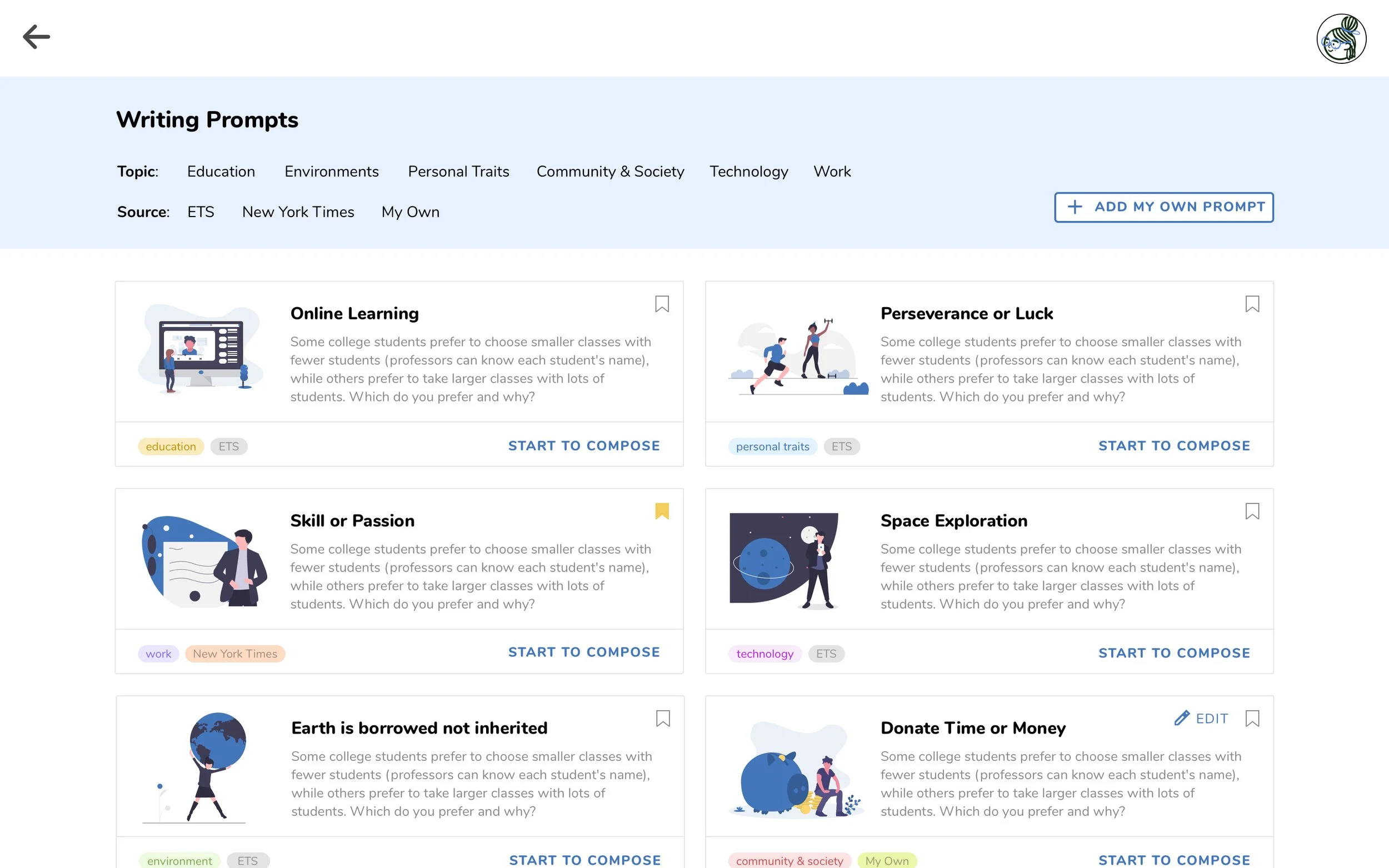

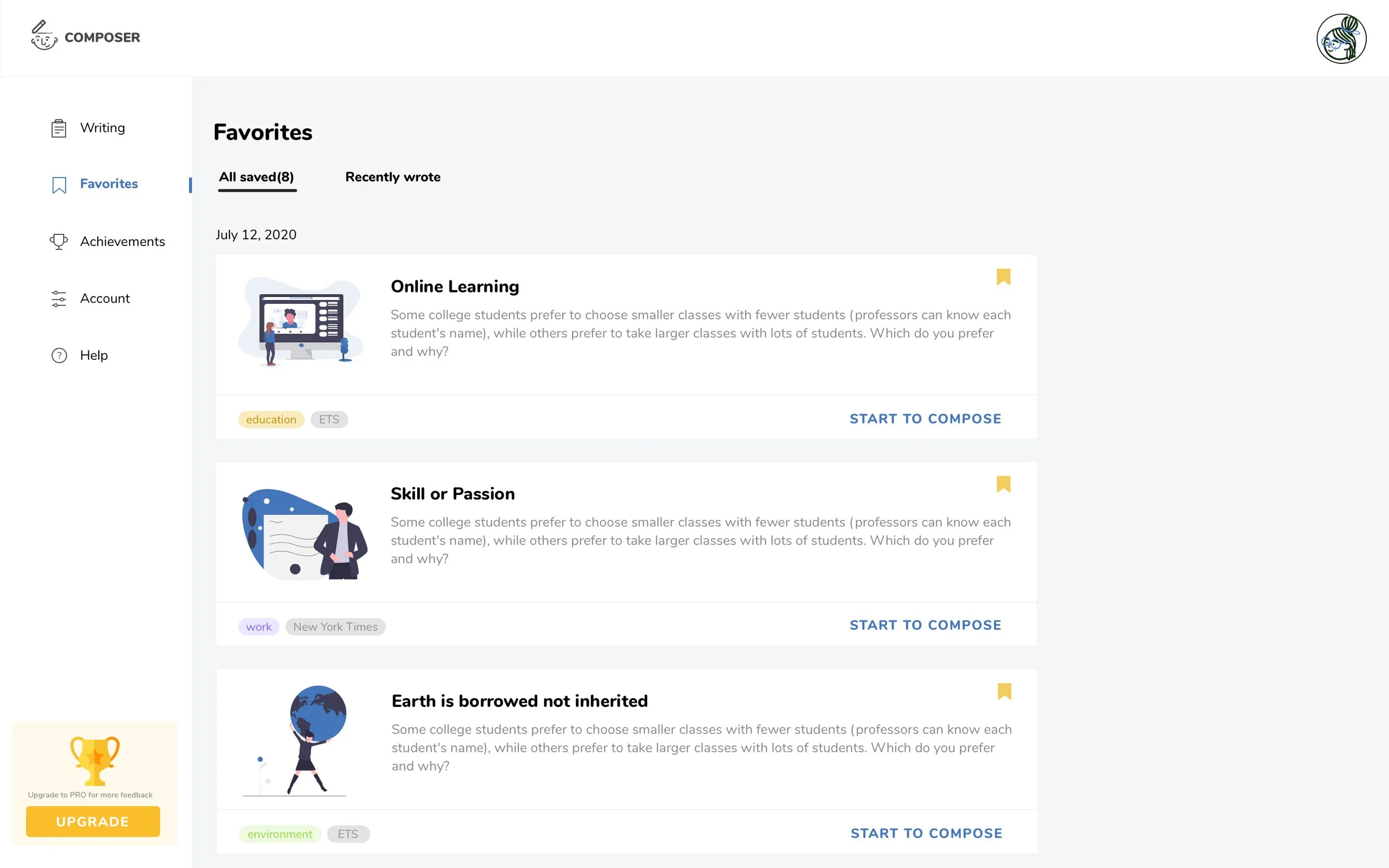

Lin was preparing for the TOEFL test and wanted to practice English writing. She opened Composer and clicked “Start with a prompt” without hesitation.

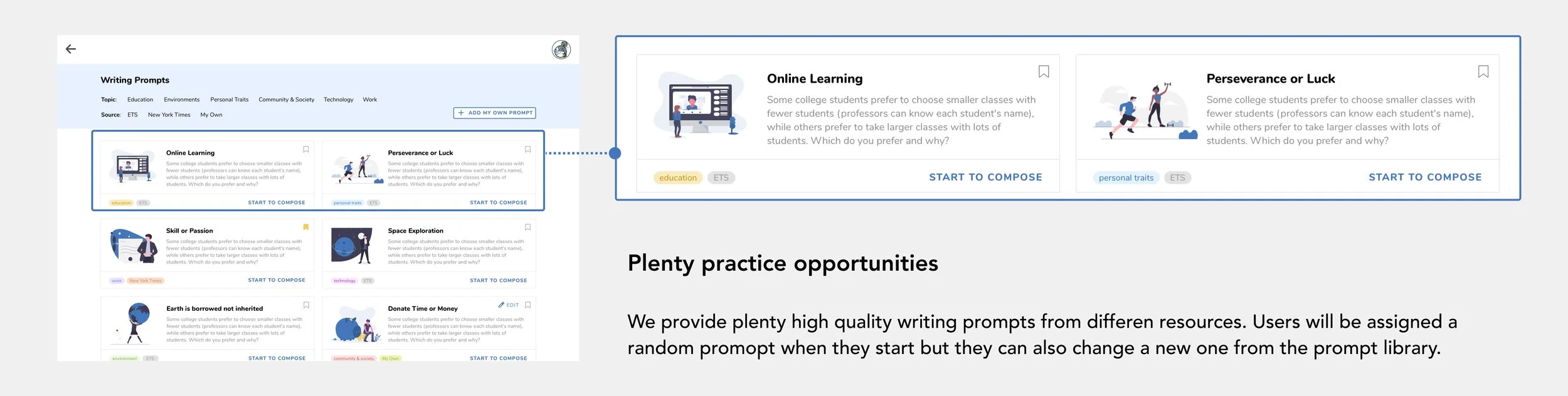

Lin was assigned a random prompt, and she was happy that she did not have to select one on her own. She took a look at the plan and started to draft the outline of the essay. She had been practicing academic English writing for a while, so she understood how important and helpful a plan is for composing an essay.

Writing a plan was not too challenging for Lin. She brainstormed and typed some scattered words and sentences. Then she switched to the compose tab, ready to start.

Lin started to write the essay based on her outline, sometimes switching back to the plan tab to check her notes. While typing, she noticed that when she edited sentences in different paragraphs, a notification appeared at the bottom saying “Good job, you are doing jump editing.” Lin thought that this looked like a good thing to do.

Lin continued typing but got stuck in the third paragraph. She clicked “writing hints” and then the example sentence option to learn from some sample sentences. The writing hints helped Lin learn some new words and writing directions.

When Lin had almost finished her essay, she wanted to check whether there was any language feedback. She clicked “all comments” and found that she might need to revise a few things.

Lin reviewed her finished version again and clicked overall performance to check more details. In this report, Lin learned that her vocabulary diversity was low and that she had made some grammar mistakes. Lin also found that her typing speed was low. The report suggested that Lin write down ideas more quickly and edit them at the end.

Lin noticed that the report recommended she try the Copy typing challenge. Lin decided to try this typing practice after dinner.

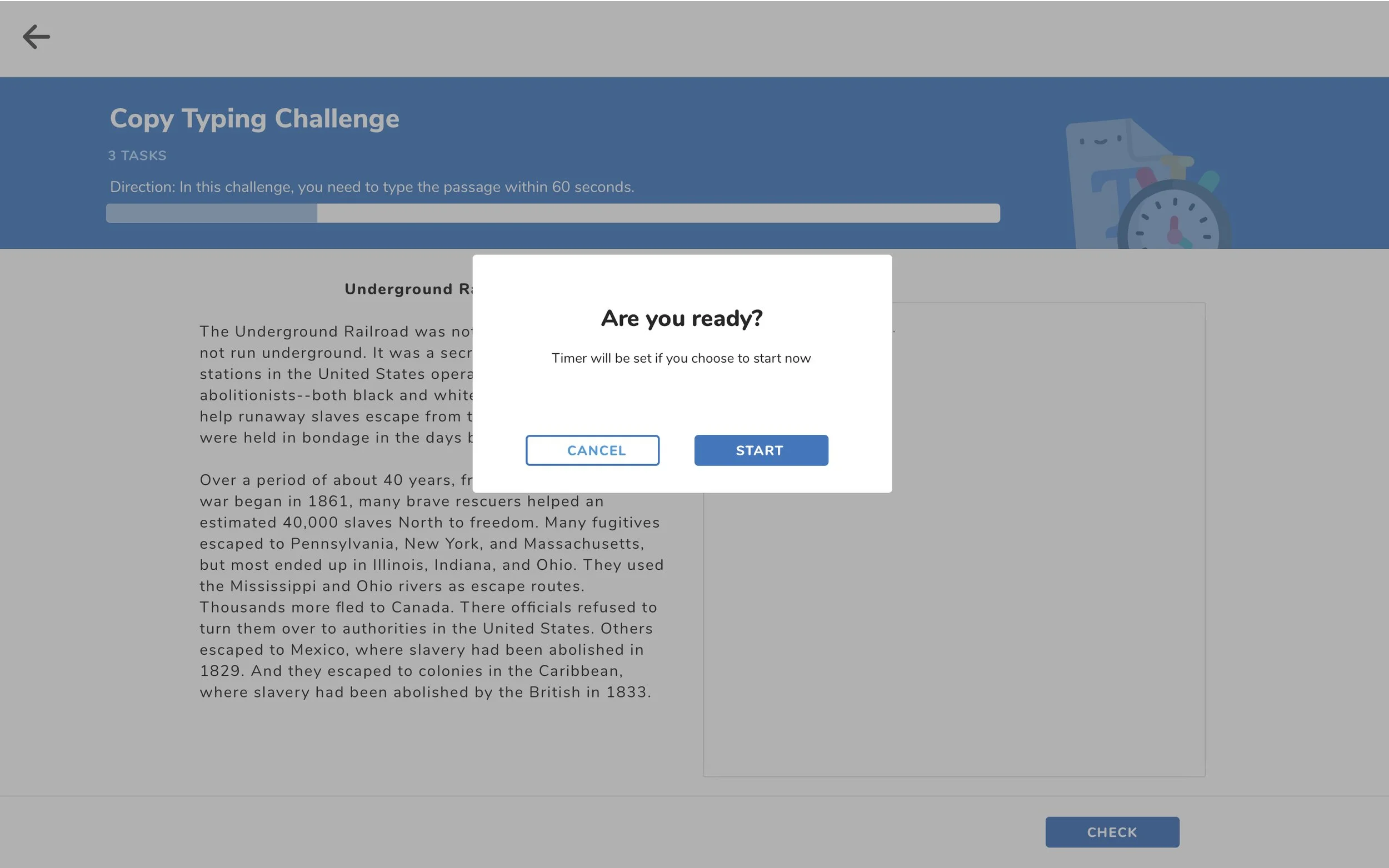

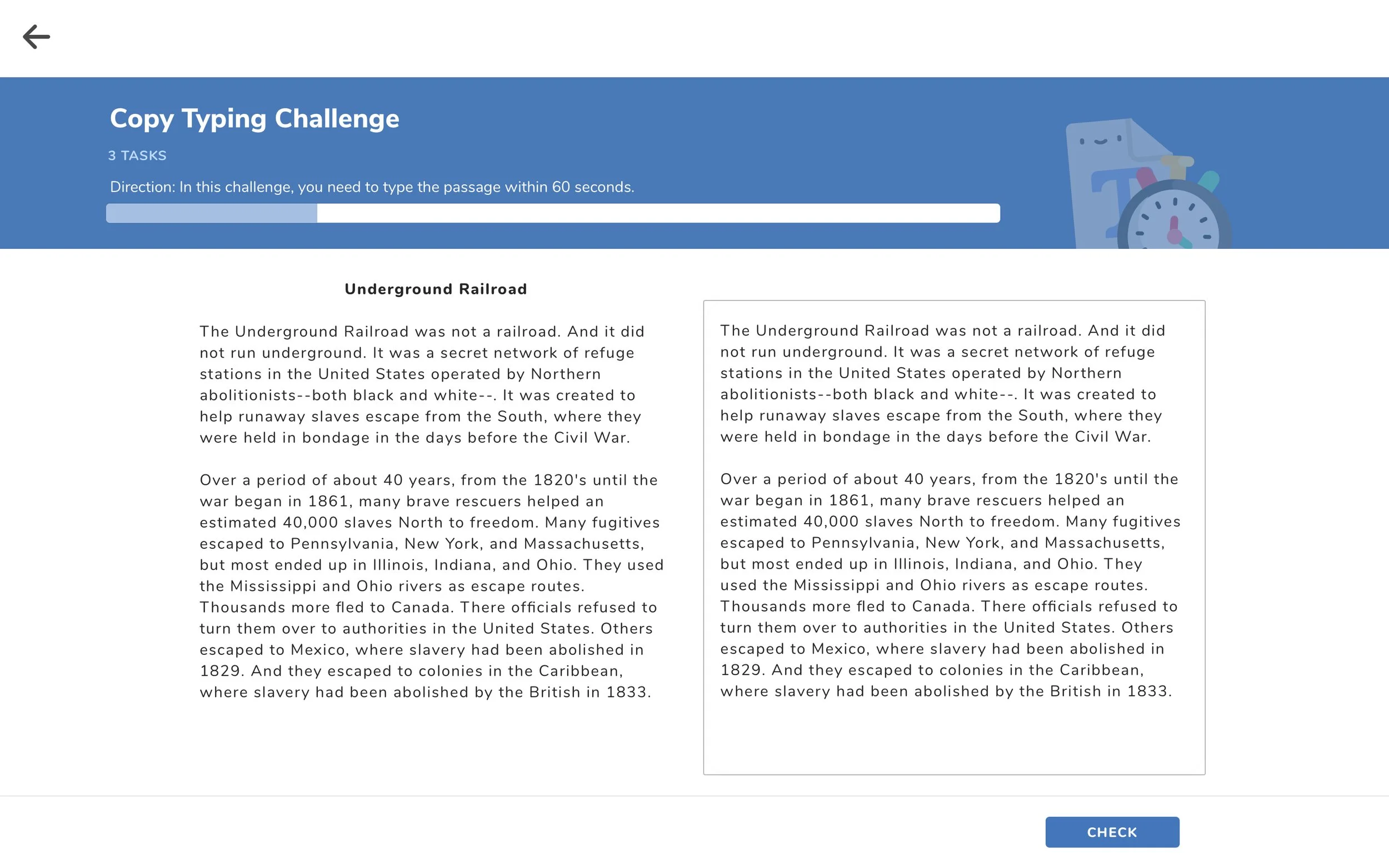

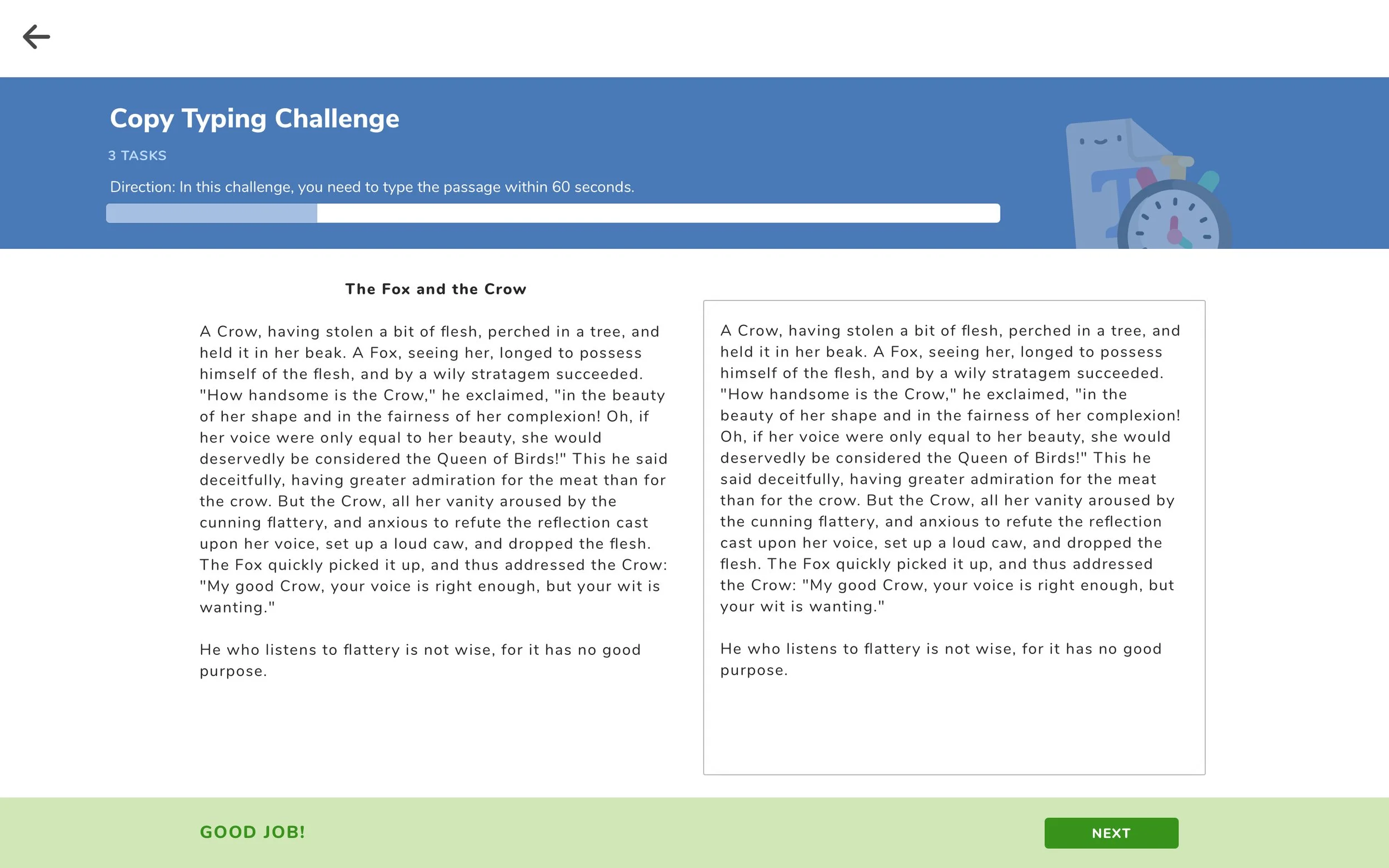

After dinner, Lin opened Composer again and started the typing challenge. It was a very short and simple practice, so she started without any hesitation. She finished three tasks.

Lin found Composer very helpful and decided to practice academic writing once a week and do writing challenges every day. After three months of practice, Lin got a high score on the TOEFL and received an offer from her dream school.

Impact

Presented at the Summit

In October 2020, this project was presented at the ASU GSV Summit. After the conference, we received many emails expressing interest in collaborating with us.
